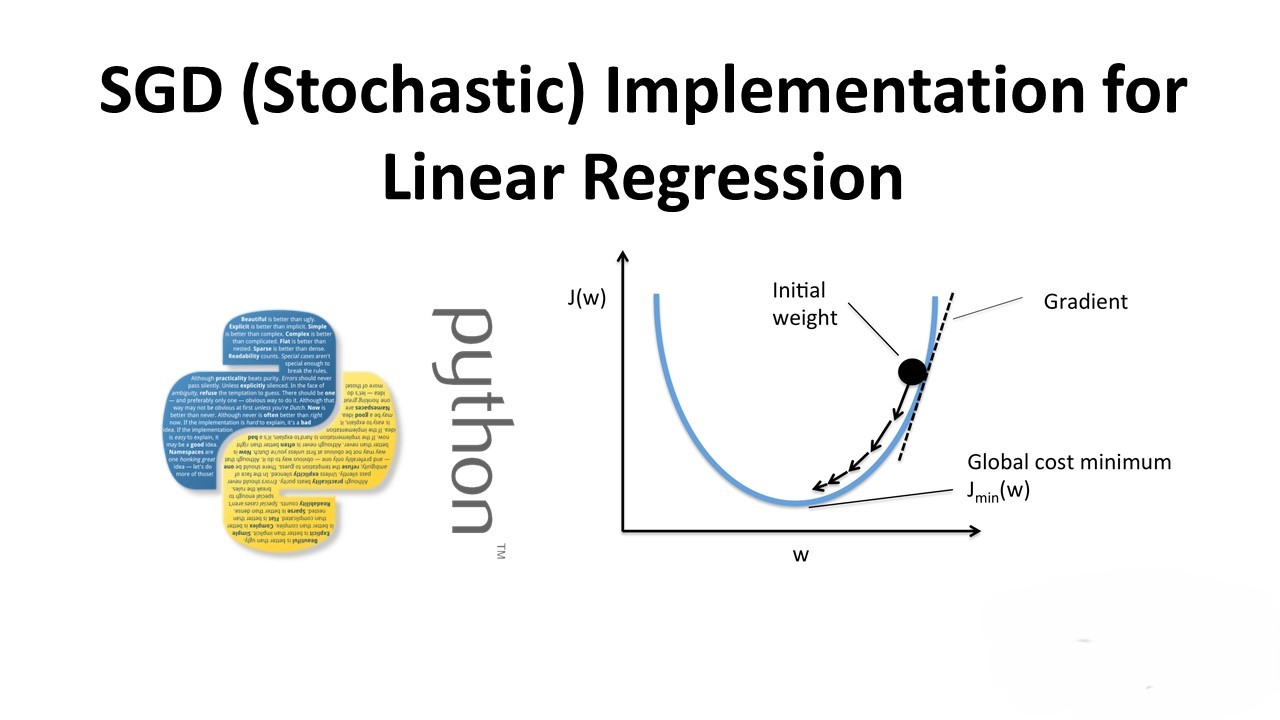

In this post, we’ll implement Stochastic Gradient Descent (SGD) for linear regression on the Boston Housing dataset and compare our custom implementation with scikit-learn's `SGDRegressor`.

Importing Libraries

```python
import warnings

warnings.filterwarnings("ignore")

from sklearn.datasets import load_boston
from sklearn import preprocessing
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from prettytable import PrettyTable
from sklearn.linear_model import SGDRegressor
from sklearn.metrics import mean_squared_error
from numpy import random
from sklearn.model_selection import train_test_split
```

Data Loading and Preprocessing

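We load the Boston Housing dataset, split it into training (70%) and test (30%) sets, and then standardize the features. The scaler is fitted on the training set only and then applied to both splits, so no statistics from the test set leak into training.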

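```python
# load_boston was deprecated in scikit-learn 1.0 and removed in 1.2,
# so this code requires scikit-learn < 1.2.
boston = load_boston()
boston_data = pd.DataFrame(boston.data, columns=boston.feature_names)

X = boston.data    # 506 samples x 13 features
Y = boston.target  # median home value, in $1000s

# Hold out 30% of the rows for testing
x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=0.3, random_state=123)

# Fit the scaler on the training split only, then apply it to both splits
scaler = preprocessing.StandardScaler().fit(x_train)
x_train = scaler.transform(x_train)
x_test = scaler.transform(x_test)
```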
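`StandardScaler` rescales each feature to z = (x − μ)/σ, where μ and σ are the per-feature mean and standard deviation of the data passed to `fit`. This NumPy sketch reproduces that on made-up arrays standing in for `x_train` and `x_test` (the names `x_tr`, `x_te`, `mu`, `sigma` are illustrative): the training split comes out with mean 0 and standard deviation 1 up to rounding, while the test split, transformed with the training statistics, only approximately does.

```python
import numpy as np

# Synthetic stand-ins for x_train / x_test (values are made up)
rng = np.random.default_rng(0)
x_tr = rng.normal(loc=50.0, scale=10.0, size=(100, 2))
x_te = rng.normal(loc=50.0, scale=10.0, size=(40, 2))

# Per-feature statistics from the training split only
mu = x_tr.mean(axis=0)
sigma = x_tr.std(axis=0)  # ddof=0, the same estimator StandardScaler uses

z_tr = (x_tr - mu) / sigma
z_te = (x_te - mu) / sigma  # the test split reuses the training statistics

print(z_tr.mean(axis=0), z_tr.std(axis=0))  # approximately 0 and 1 per column
print(z_te.mean(axis=0))  # close to, but not exactly, 0
```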

SKLearn Implementation of SGD

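We start with scikit-learn's `SGDRegressor` as a baseline. `max_iter` caps the number of passes (epochs) over the training data, and `tol` stops training early once the loss stops improving by at least that amount for several consecutive epochs. All other settings are defaults, including squared-error loss and a small L2 penalty (`alpha=0.0001`).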

```python
model = SGDRegressor(max_iter=1000, tol=1e-3)
model.fit(x_train, y_train)
y_pred_sksgd = model.predict(x_test)
```
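Because only `max_iter` and `tol` are set, `SGDRegressor` also keeps its default learning-rate schedule, `learning_rate='invscaling'`, which the scikit-learn documentation defines as eta = eta0 / t^power_t with defaults `eta0=0.01` and `power_t=0.25`. The plain-Python sketch below only evaluates that formula to show how gently the step size shrinks; `invscaling_eta` is a hypothetical helper, and treating `t` as a simple step counter is a simplification.

```python
def invscaling_eta(t, eta0=0.01, power_t=0.25):
    """Step size under the 'invscaling' schedule: eta0 / t**power_t."""
    return eta0 / t ** power_t


# The step size halves after 16 steps and shrinks 10x after 10,000 steps
for t in (1, 16, 10_000):
    print(t, invscaling_eta(t))
```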

MSE for the SKLearn SGD

```python
mse_sksgd = mean_squared_error(y_test, y_pred_sksgd)
print("MSE for SKLearn SGD:", mse_sksgd)
```
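`mean_squared_error` averages the squared residuals, MSE = (1/n) Σᵢ (yᵢ − ŷᵢ)². The hypothetical `mse` helper below computes the same quantity in NumPy and checks it against a hand-worked toy example.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean of the squared residuals."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return np.mean((y_true - y_pred) ** 2)


# Residuals 0.5, -0.5, 0.0, -1.0 -> squares 0.25, 0.25, 0.0, 1.0 -> mean 0.375
print(mse([3.0, -0.5, 2.0, 7.0], [2.5, 0.0, 2.0, 8.0]))  # 0.375
```

Since the Boston target is the median home value in thousands of dollars, the MSE is in squared thousands of dollars; taking `np.sqrt` of it gives the root mean squared error in the target's own units.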

Obtaining Weights from SKLearn SGD

```python
sklearn_w = model.coef_
print("Weights from SKLearn SGD:", sklearn_w)
```

Custom Implementation of SGD

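Next, we implement mini-batch SGD for linear regression ourselves. `MyCustomSGD` takes the training data, a learning rate, the number of iterations `n_iter`, the batch size `k`, and a `divideby` parameter, and returns the learned weights `w`, the bias `b`, and `elist`. We then predict on the test set from `w` and `b`.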
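For reference, the mini-batch update for linear regression under squared loss is given below, assuming each iteration draws a batch $B$ of $k$ training rows, predicts $\hat{y}_i = \mathbf{w}^\top \mathbf{x}_i + b$, and averages the squared error over the batch. Whether `MyCustomSGD` averages or sums over the batch is not shown, so the $1/k$ factor is an assumption; $\eta$ is the learning rate.

$$
\begin{aligned}
L(\mathbf{w}, b) &= \frac{1}{k} \sum_{i \in B} \left(y_i - \mathbf{w}^\top \mathbf{x}_i - b\right)^2 \\
\frac{\partial L}{\partial \mathbf{w}} &= -\frac{2}{k} \sum_{i \in B} \mathbf{x}_i \left(y_i - \mathbf{w}^\top \mathbf{x}_i - b\right) \\
\frac{\partial L}{\partial b} &= -\frac{2}{k} \sum_{i \in B} \left(y_i - \mathbf{w}^\top \mathbf{x}_i - b\right) \\
\mathbf{w} &\leftarrow \mathbf{w} - \eta \, \frac{\partial L}{\partial \mathbf{w}}, \qquad b \leftarrow b - \eta \, \frac{\partial L}{\partial b}
\end{aligned}
$$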

```python
def MyCustomSGD(train_data, learning_rate, n_iter, k, divideby):
    # Implementation code
    return w, b, elist

# Setting custom parameters
w, b, elist = MyCustomSGD(train_data, learning_rate=0.01, n_iter=1000, divideby=2, k=10)
y_pred_customsgd = predict(x_test, w, b)
```
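Because the body of `MyCustomSGD` is not shown, here is a minimal NumPy sketch of mini-batch SGD for linear regression that returns the same three outputs. It is not the author's code: the function name `minibatch_sgd`, its separate `x`/`y` arguments (instead of `train_data`), the `seed` argument, and the meanings of `k` (mini-batch size), `divideby` (divisor applied to the learning rate after every iteration) and `elist` (training MSE per iteration) are all assumptions. The `predict` helper here simply computes `x @ w + b`.

```python
import numpy as np


def minibatch_sgd(x, y, learning_rate, n_iter, k, divideby=1.0, seed=0):
    """Mini-batch SGD for linear regression with squared loss (a sketch).

    Assumptions (not from the post): k is the mini-batch size, and the
    learning rate is divided by `divideby` after every iteration, so
    divideby=1 keeps it constant. elist holds the training MSE after
    each iteration.
    """
    rng = np.random.default_rng(seed)
    n, d = x.shape
    w = np.zeros(d)
    b = 0.0
    elist = []
    for _ in range(n_iter):
        # Draw k distinct rows for this mini-batch
        idx = rng.choice(n, size=k, replace=False)
        xb, yb = x[idx], y[idx]
        residual = yb - (xb @ w + b)
        # Gradients of the batch-averaged squared error
        grad_w = -2.0 * (xb.T @ residual) / k
        grad_b = -2.0 * residual.mean()
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        learning_rate /= divideby
        # Full training-set MSE, recorded to monitor convergence
        elist.append(float(np.mean((y - (x @ w + b)) ** 2)))
    return w, b, elist


def predict(x, w, b):
    """Linear-model predictions x @ w + b."""
    return x @ w + b


# Usage on synthetic, noise-free data with known weights and bias
rng = np.random.default_rng(42)
x_demo = rng.normal(size=(200, 3))
true_w = np.array([1.5, -2.0, 0.5])
y_demo = x_demo @ true_w + 3.0

w, b, elist = minibatch_sgd(x_demo, y_demo, learning_rate=0.05, n_iter=2000, k=10)
print(np.round(w, 3), round(float(b), 3), elist[-1])

# Halving the learning rate every iteration stalls training early
w_fast, b_fast, elist_fast = minibatch_sgd(
    x_demo, y_demo, learning_rate=0.05, n_iter=2000, k=10, divideby=2
)
print(elist_fast[-1])
```

Under this reading of `divideby`, a value of 2 halves the step size every iteration, so it becomes negligible within a few dozen iterations and the weights effectively stop moving; the second call in the example shows the training MSE staying far above zero as a result.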

Plot and MSE for the Custom SGD

```python
plt.scatter(y_test, y_pred_customsgd)
plt.grid()
plt.xlabel('Actual y')
plt.ylabel('Predicted y')
plt.title('Scatter plot of actual vs. predicted y for Custom SGD')
plt.show()

mse_customsgd = mean_squared_error(y_test, y_pred_customsgd)
print("Mean Squared Error for Custom SGD:", mse_customsgd)
```

Obtaining Weights from Custom SGD

```python
custom_w = w
print("Weights from Custom SGD:", custom_w)
```
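Both SGD variants approximate the ordinary least-squares solution (scikit-learn's version also applies its small default L2 penalty), so a closed-form fit is a useful yardstick when comparing weights. The sketch below, on synthetic data rather than the Boston set, solves least squares with `np.linalg.lstsq` after appending a column of ones for the bias; `ols_reference` is a hypothetical helper. Applying it to `x_train` and `y_train` would give reference weights to set beside `sklearn_w` and `custom_w`.

```python
import numpy as np


def ols_reference(x, y):
    """Closed-form least-squares weights and bias via np.linalg.lstsq."""
    # Append a column of ones so the last coefficient is the bias term
    x_aug = np.hstack([x, np.ones((x.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(x_aug, y, rcond=None)
    return coef[:-1], coef[-1]


# Synthetic data with known weights, bias 4.0, and small Gaussian noise
rng = np.random.default_rng(1)
x_demo = rng.normal(size=(300, 4))
true_w = np.array([2.0, 0.0, -1.0, 0.5])
y_demo = x_demo @ true_w + 4.0 + rng.normal(scale=0.1, size=300)

w_ref, b_ref = ols_reference(x_demo, y_demo)
print(np.round(w_ref, 2), round(float(b_ref), 2))
```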

Conclusion

We compared our custom SGD implementation with scikit-learn's `SGDRegressor` on test-set MSE and learned weights. Our custom implementation initially performed poorly but improved significantly once we adjusted the learning rate and batch size. With proper tuning, custom SGD can achieve performance similar to scikit-learn's implementation.
