In unsupervised learning, autoencoders are a widely used tool for data representation and feature learning. These neural networks learn compact encodings of their inputs without labels, which makes them useful for tasks like dimensionality reduction, anomaly detection, and data denoising. Let’s look at the inner workings of autoencoders and explore their practical applications.

Understanding Autoencoders

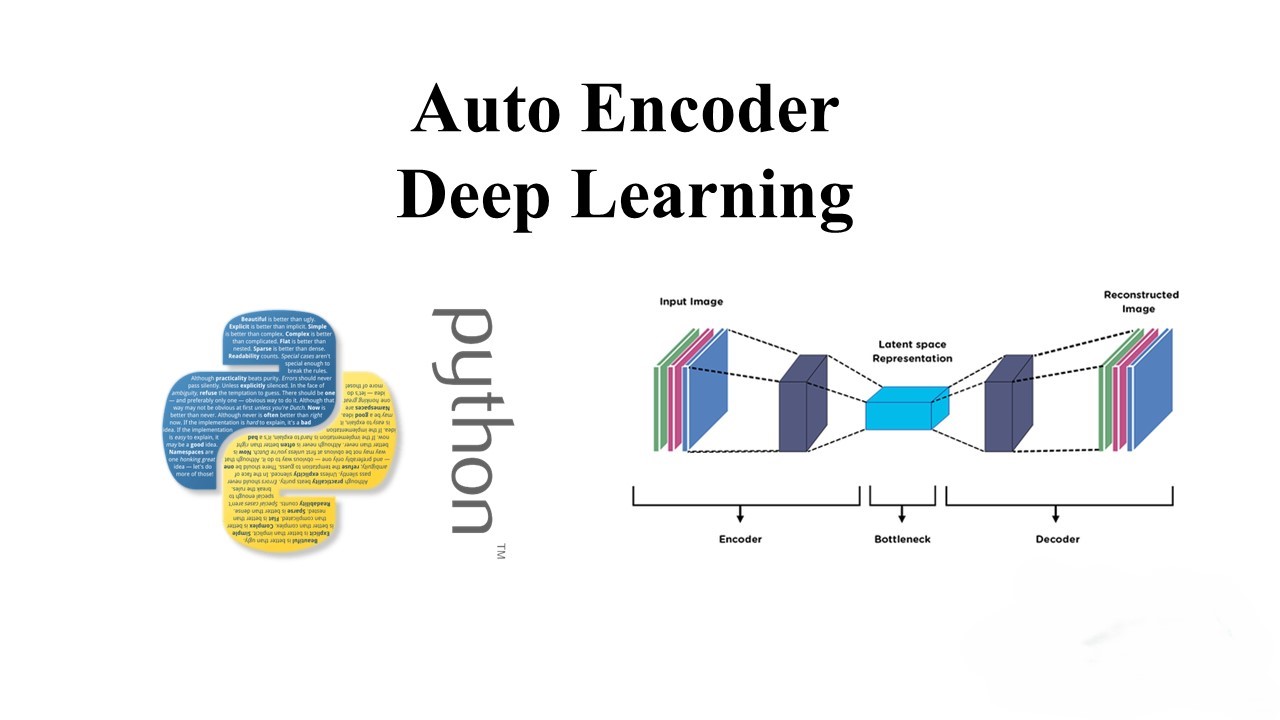

At its core, an autoencoder aims to reconstruct its input data at the output layer, passing it through a compressed representation (the latent space) in between. The network consists of an encoder, which compresses the input into the latent space, and a decoder, which reconstructs the input from the latent space representation. Because the latent space is smaller than the input, training the autoencoder to minimize the reconstruction error forces it to capture the most salient features of the data.
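To make the encoder, decoder, and reconstruction error concrete, here is a minimal NumPy sketch of a single forward pass. The layer sizes, the random untrained weights, and the function names `encode`, `decode`, and `reconstruction_error` are assumptions chosen for illustration, not part of any library.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 784 input features compressed to a 64-dimensional code.
input_dim, latent_dim = 784, 64

# Randomly initialised (untrained) weights, for illustration only.
W_enc = rng.normal(scale=0.01, size=(input_dim, latent_dim))
W_dec = rng.normal(scale=0.01, size=(latent_dim, input_dim))

def encode(x):
    """Compress inputs into the latent space (ReLU activation)."""
    return np.maximum(x @ W_enc, 0.0)

def decode(z):
    """Map latent codes back to input space (sigmoid output)."""
    return 1.0 / (1.0 + np.exp(-(z @ W_dec)))

def reconstruction_error(x):
    """Mean squared error between each input and its reconstruction."""
    x_hat = decode(encode(x))
    return np.mean((x - x_hat) ** 2, axis=1)

x = rng.random((5, input_dim))   # a batch of 5 fake inputs in [0, 1)
z = encode(x)
errors = reconstruction_error(x)
print(z.shape, errors.shape)     # (5, 64) (5,)
```

Training amounts to adjusting `W_enc` and `W_dec` so that the values in `errors` shrink across the dataset; a framework such as Keras handles that optimization step.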

Applications of Autoencoders

- Dimensionality Reduction: Autoencoders can learn a compact representation of high-dimensional data, enabling efficient storage and computation. This is particularly useful in scenarios with large datasets or limited computational resources.

- Anomaly Detection: An autoencoder trained on normal data learns to reconstruct normal patterns well, so inputs that deviate significantly from the norm produce a high reconstruction error and can be flagged as anomalies or outliers. This makes autoencoders valuable for fraud detection, cybersecurity, and quality control.

- Data Denoising: Autoencoders can reconstruct clean data from noisy inputs, effectively denoising the data. This is beneficial in scenarios where the data is corrupted or contains irrelevant information.
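The first two applications can be sketched without training a neural network at all. The example below swaps in a linear autoencoder fit in closed form with an SVD (which is equivalent to PCA) as a stand-in for a trained network. The synthetic data, the dimensions, and the 99th-percentile threshold are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic "normal" data: 500 points in 20 dimensions that lie close to a
# 3-dimensional subspace (sizes chosen only for illustration).
basis = rng.normal(size=(3, 20))
normal = rng.normal(size=(500, 3)) @ basis + 0.05 * rng.normal(size=(500, 20))

# Fit a linear autoencoder in closed form: the top-k right singular vectors
# act as the encoder and, transposed, as the decoder. This is PCA.
mean = normal.mean(axis=0)
_, _, vt = np.linalg.svd(normal - mean, full_matrices=False)
components = vt[:3]                      # shape (3, 20)

def encode(x):
    return (x - mean) @ components.T     # 20 -> 3 dimensions

def decode(z):
    return z @ components + mean         # 3 -> 20 dimensions

def score(x):
    """Per-sample reconstruction error, used as the anomaly score."""
    return np.mean((x - decode(encode(x))) ** 2, axis=1)

# Threshold at the 99th percentile of scores on normal data (an assumed rule).
threshold = np.percentile(score(normal), 99)

# Points drawn without the low-dimensional structure should score far higher.
outliers = rng.normal(size=(10, 20))
flags = score(outliers) > threshold
print(encode(normal).shape, int(flags.sum()), "of", len(outliers), "flagged")
```

Here `encode` performs the dimensionality reduction (20 values down to 3), and `score` turns reconstruction error into an anomaly score. With a nonlinear autoencoder the same pattern applies, with the trained network's reconstruction in place of `decode(encode(x))`.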

Implementing an Autoencoder in Python

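Here’s a simple example of building and training a denoising autoencoder using TensorFlow and Keras. The network takes flattened 784-value inputs (for example, 28×28 images), is fed noisy samples, and is trained to reproduce the clean originals: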

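    import numpy as np
    import tensorflow as tf
    from tensorflow.keras.layers import Input, Dense
    from tensorflow.keras.models import Model

    # Load MNIST (assumed here because it matches the 784-value input),
    # flatten each 28x28 image, and scale pixels to [0, 1]
    (x_train, _), (x_test, _) = tf.keras.datasets.mnist.load_data()
    x_train = x_train.reshape(-1, 784).astype('float32') / 255.0
    x_test = x_test.reshape(-1, 784).astype('float32') / 255.0

    # Corrupt the inputs with Gaussian noise (0.5 is an assumed noise level)
    noise_factor = 0.5
    x_train_noisy = np.clip(x_train + noise_factor * np.random.normal(size=x_train.shape), 0.0, 1.0)
    x_test_noisy = np.clip(x_test + noise_factor * np.random.normal(size=x_test.shape), 0.0, 1.0)

    # Define the encoder: 784 -> 128 -> 64
    input_layer = Input(shape=(784,))
    encoded = Dense(128, activation='relu')(input_layer)
    encoded = Dense(64, activation='relu')(encoded)

    # Define the decoder: 64 -> 128 -> 784
    decoded = Dense(128, activation='relu')(encoded)
    decoded = Dense(784, activation='sigmoid')(decoded)

    # Create the autoencoder
    autoencoder = Model(input_layer, decoded)

    # Compile the model
    autoencoder.compile(optimizer='adam', loss='binary_crossentropy')

    # Train the autoencoder: noisy images in, clean images as targets
    autoencoder.fit(x_train_noisy, x_train, epochs=10, batch_size=256, shuffle=True, validation_data=(x_test_noisy, x_test))

Closing Thoughts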

Autoencoders are versatile tools with a wide range of applications in machine learning and data analysis. By learning to compress and reconstruct data, these networks can uncover patterns that are hard to specify by hand, whether the goal is a compact representation, an anomaly score, or a cleaner signal. If you work with high-dimensional or noisy datasets, autoencoders are a valuable addition to your toolkit.
