Introduction:

In recent years, Generative AI has witnessed a paradigm shift with the introduction of transformer models. These models, characterized by their attention mechanisms, have revolutionized natural language processing (NLP) and other generative tasks. In this blog post, we’ll explore the transformer architecture, its applications in NLP, and its extension to other creative domains.

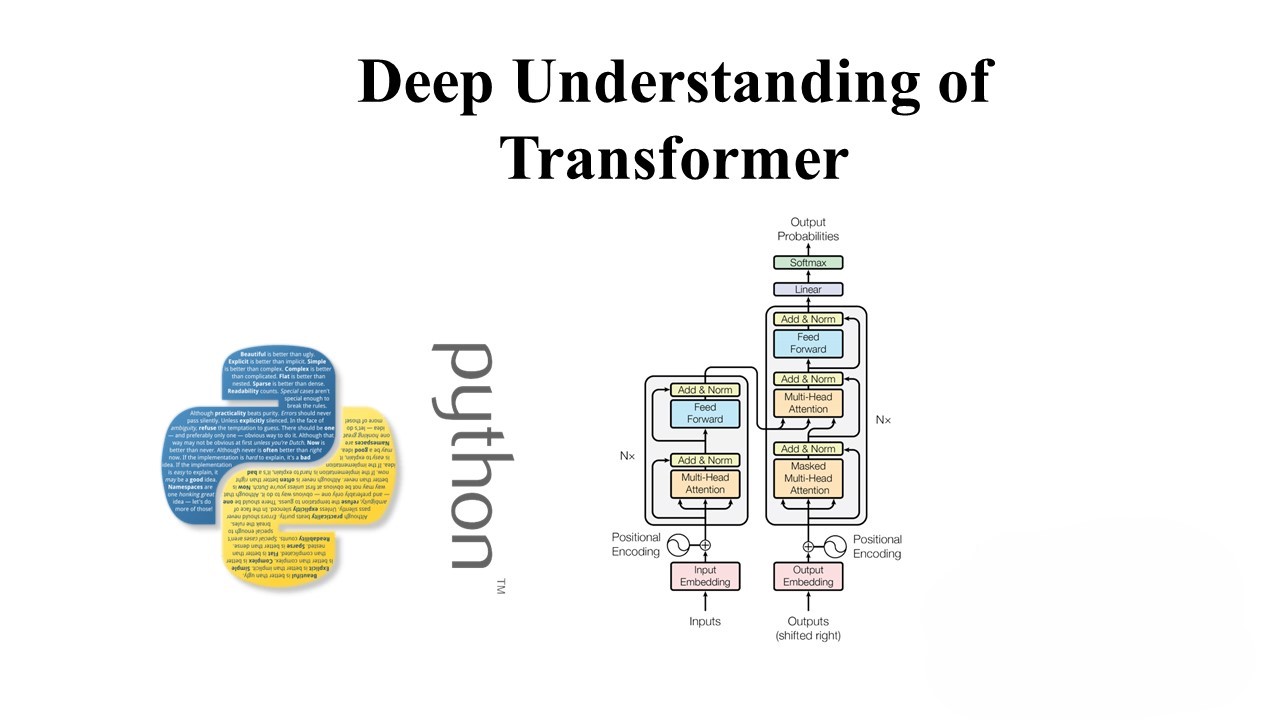

Understanding Transformers:

Transformers rely on self-attention mechanisms to weigh the importance of every input token relative to every other token when generating an output. Because any two positions can interact directly in a single layer, transformers capture long-range dependencies more effectively than recurrent models such as RNNs and LSTMs, which pass information along one token at a time. Here’s a basic illustration of a transformer’s self-attention mechanism:
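Stripped of batching, multiple heads, and learned projections, the core computation is a softmax over scaled query-key dot products, followed by a weighted average of the value vectors. The sketch below is a minimal pure-Python version of that idea; the helper names (`softmax`, `attention`) and the toy token vectors are assumptions made up for illustration and are not part of any library.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention on lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input: three tokens with 2-dimensional embeddings (made-up numbers)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token attends to every token in the sequence, and each row sums to 1. The PyTorch layer that follows applies the same computation once per head, with learned linear projections for queries, keys, and values.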

    import torch
    import torch.nn as nn


    # Define a simple multi-head self-attention layer
    class SelfAttention(nn.Module):
        def __init__(self, embed_size, heads):
            super().__init__()
            self.embed_size = embed_size
            self.heads = heads
            self.head_dim = embed_size // heads

            assert (
                self.head_dim * heads == embed_size
            ), "Embedding size needs to be divisible by heads"

            # Linear projections applied to each head's slice of the embedding
            self.values = nn.Linear(self.head_dim, self.head_dim, bias=False)
            self.keys = nn.Linear(self.head_dim, self.head_dim, bias=False)
            self.queries = nn.Linear(self.head_dim, self.head_dim, bias=False)
            self.fc_out = nn.Linear(heads * self.head_dim, embed_size)

        def forward(self, values, keys, query, mask):
            # Batch size
            N = query.shape[0]
            value_len, key_len, query_len = values.shape[1], keys.shape[1], query.shape[1]

            # Split the embedding into self.heads different pieces
            values = values.reshape(N, value_len, self.heads, self.head_dim)
            keys = keys.reshape(N, key_len, self.heads, self.head_dim)
            queries = query.reshape(N, query_len, self.heads, self.head_dim)

            values = self.values(values)
            keys = self.keys(keys)
            queries = self.queries(queries)

            # Query-key dot products, shape (N, heads, query_len, key_len)
            energy = torch.einsum("nqhd,nkhd->nhqk", queries, keys)

            if mask is not None:
                # Masked positions get a very negative score, so softmax gives them ~0 weight
                energy = energy.masked_fill(mask == 0, float("-1e20"))

            # Scale by sqrt(head_dim), the per-head key dimension, then normalize over keys
            attention = torch.softmax(energy / (self.head_dim ** 0.5), dim=3)

            # Weighted sum of values, then concatenate the heads
            out = torch.einsum("nhql,nlhd->nqhd", attention, values).reshape(
                N, query_len, self.heads * self.head_dim
            )

            out = self.fc_out(out)
            return out

Applications of Transformers in NLP:

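Transformers have been instrumental in various NLP tasks, including text classification, sentiment analysis, and machine translation. A pre-trained model like BERT can be adapted to sentiment analysis by adding a classification head and fine-tuning on labeled examples. The Hugging Face snippet in this section loads BERT with such a head and runs one forward pass; because the head starts out randomly initialized, the predicted class is not meaningful until the model has been fine-tuned.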
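Fine-tuning a classifier of this kind typically means minimizing the cross-entropy between the model's logits and the true label. The sketch below works through that loss and one gradient step in pure Python. The logits, the label convention (1 meaning "positive"), and the learning rate are all made-up assumptions, and the step is applied directly to the logits for illustration; in real fine-tuning an optimizer updates the model's weights instead.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Negative log-probability assigned to the correct class."""
    return -math.log(softmax(logits)[label])


# Hypothetical logits for one review; label 1 is assumed to mean "positive"
logits = [0.3, -0.1]
label = 1
loss_before = cross_entropy(logits, label)

# Gradient of the loss with respect to the logits is (probabilities - one_hot)
probs = softmax(logits)
grad = [p - (1.0 if i == label else 0.0) for i, p in enumerate(probs)]

# One plain gradient-descent step applied directly to the logits
learning_rate = 0.5
updated = [x - learning_rate * g for x, g in zip(logits, grad)]
loss_after = cross_entropy(updated, label)

print(f"loss before: {loss_before:.3f}, loss after: {loss_after:.3f}")
```

The loss drops after the step because the update pushes the logit of the correct class up and the other one down. The Hugging Face snippet that follows produces logits of this kind for a single sentence.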

    from transformers import BertTokenizer, BertForSequenceClassification
    import torch

    # Load pre-trained BERT model and tokenizer
    tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
    # The classification head is newly initialized; fine-tune before trusting predictions
    model = BertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=2)
    model.eval()

    # Tokenize input text
    text = "This movie is great!"
    inputs = tokenizer(text, return_tensors='pt')

    # Forward pass through the model (no gradients needed for inference)
    with torch.no_grad():
        outputs = model(**inputs)

    # Get the predicted class
    logits = outputs.logits
    predicted_class = torch.argmax(logits, dim=1).item()
    print(f"Predicted sentiment class: {predicted_class}")

Beyond NLP: Transformers in Generative Tasks:

Transformers have also been applied to images. The Vision Transformer (ViT) splits an image into fixed-size patches and processes them as a sequence of tokens. ViT itself is an encoder built for image recognition rather than a generator, but patch-based transformer backbones of this kind are also used inside image generation systems. Here’s a basic example of using a pre-trained ViT model to encode an image into patch embeddings:

    from transformers import ViTModel, ViTImageProcessor
    import torch
    from PIL import Image

    # Load pre-trained ViT encoder and its image processor
    model = ViTModel.from_pretrained('google/vit-base-patch16-224-in21k')
    image_processor = ViTImageProcessor.from_pretrained('google/vit-base-patch16-224-in21k')
    model.eval()

    # Load and preprocess image
    image_path = 'path_to_image.jpg'
    image = Image.open(image_path).convert('RGB')
    inputs = image_processor(images=image, return_tensors='pt')

    # Encode the image (no gradients needed for inference)
    with torch.no_grad():
        outputs = model(**inputs)

    # One embedding per 16x16 patch, plus one for the [CLS] token
    patch_embeddings = outputs.last_hidden_state
    print(patch_embeddings.shape)  # (1, 197, 768) for a 224x224 input

Challenges and Future Directions:

While transformers have shown remarkable success, they also face challenges in scalability and efficiency: the cost of standard self-attention grows quadratically with sequence length, which makes long inputs expensive in both compute and memory. Researchers are exploring techniques like sparse attention mechanisms and more efficient model architectures to address these challenges and unlock new possibilities in Generative AI.
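One common form of sparse attention restricts each token to a local window of neighbors. The sketch below compares how many query-key pairs full attention and a sliding-window pattern would score. The function names and the sizes (`seq_len`, `window`) are illustrative assumptions, not values from any particular model, and sliding windows are only one of several sparsity patterns.

```python
def full_mask(seq_len):
    """Every token may attend to every token: seq_len * seq_len pairs."""
    return [[True] * seq_len for _ in range(seq_len)]


def sliding_window_mask(seq_len, window):
    """Each token attends only to tokens at most `window` positions away."""
    return [
        [abs(i - j) <= window for j in range(seq_len)] for i in range(seq_len)
    ]


def count_pairs(mask):
    """Number of (query, key) pairs the mask allows."""
    return sum(sum(row) for row in mask)


# Illustrative sizes, not taken from any specific model
seq_len, window = 1024, 8
dense = count_pairs(full_mask(seq_len))
sparse = count_pairs(sliding_window_mask(seq_len, window))
print(f"dense pairs: {dense}, sliding-window pairs: {sparse}")
```

With a fixed window, the number of scored pairs grows roughly linearly with sequence length instead of quadratically, which is the saving these methods aim for. The trade-off is that distant tokens can only influence each other indirectly, through several layers.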

Conclusion:

Transformers have emerged as a game-changer in Generative AI, enabling advancements in NLP, image generation, and beyond. As researchers continue to innovate, we can expect transformers to play an increasingly important role in shaping the future of AI.
