Category: Generative AI

Parameter-Efficient Fine-Tuning of Large Language Models with Hugging Face’s PEFT Library

Introduction: Large Language Models (LLMs) like GPT, T5, and BERT have shown remarkable performance on NLP tasks. However, fully fine-tuning these models on downstream tasks can be computationally expensive, because every pretrained weight is updated. Parameter-Efficient Fine-Tuning (PEFT) approaches address this challenge by training only a small number of parameters while keeping most of the pretrained model frozen. In this blog…

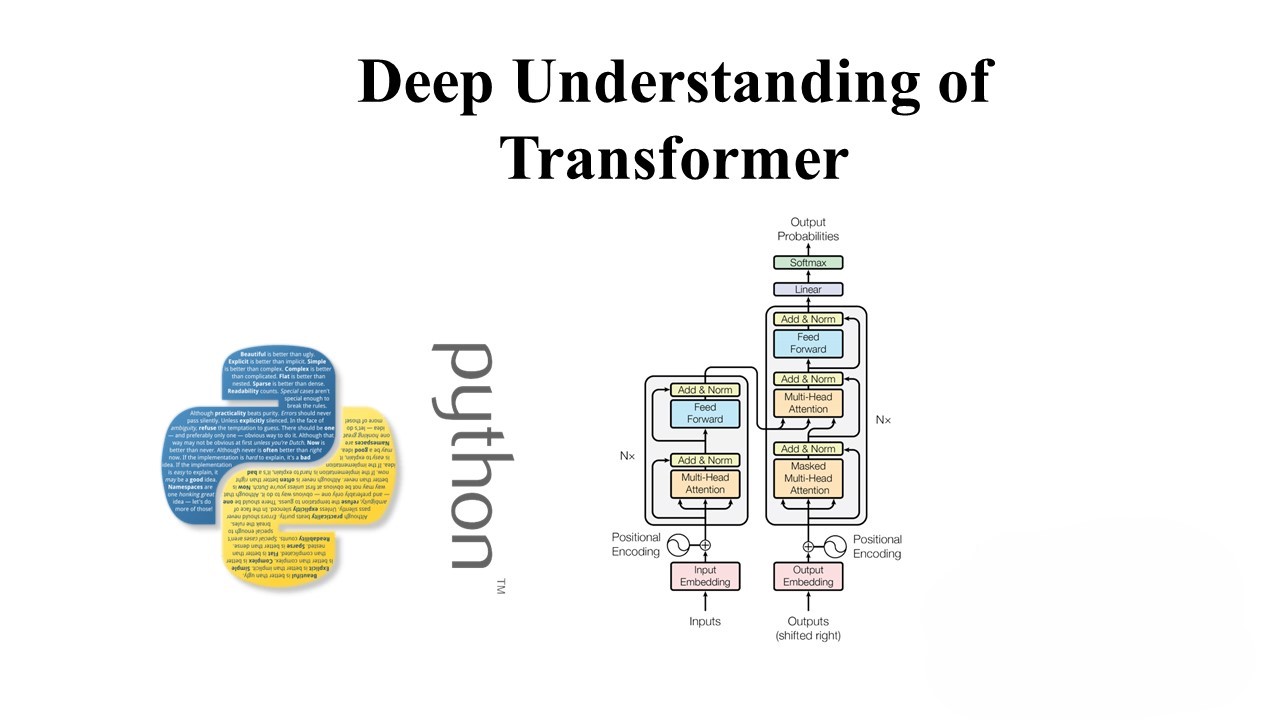

A Deep Dive into Transformers and Their Function

Introduction: In recent years, Generative AI has witnessed a paradigm shift with the introduction of transformer models. These models, characterized by their attention mechanisms, have revolutionized natural language processing (NLP) and other generative tasks. In this blog post, we’ll explore the transformer architecture, its applications in NLP, and its extension to other creative domains. Understanding…