Category: Machine Learning

-

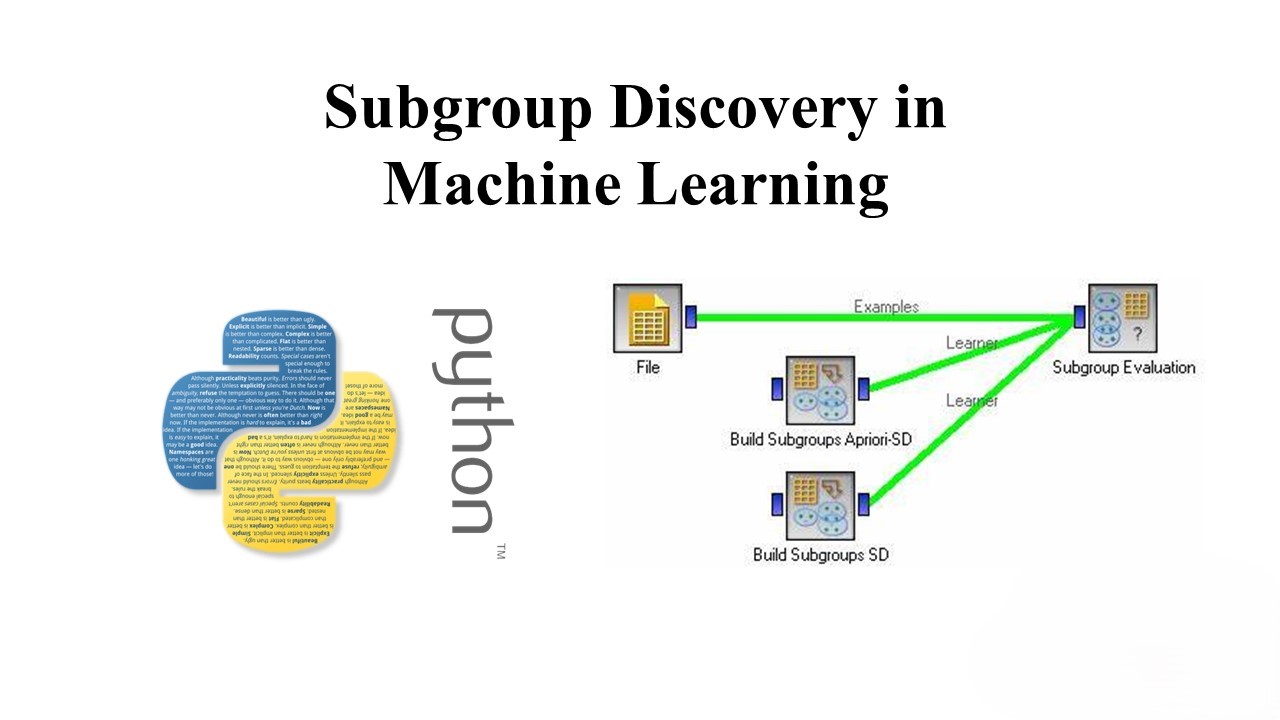

A Guide to Subgroup Discovery in Machine Learning

In the vast landscape of machine learning, uncovering hidden patterns in data is often the key to unlocking valuable insights. One powerful technique for achieving this is subgroup discovery, a method that focuses on identifying subsets of data that exhibit unique or interesting behavior. In this blog post, we’ll explore the concept of subgroup discovery…

-

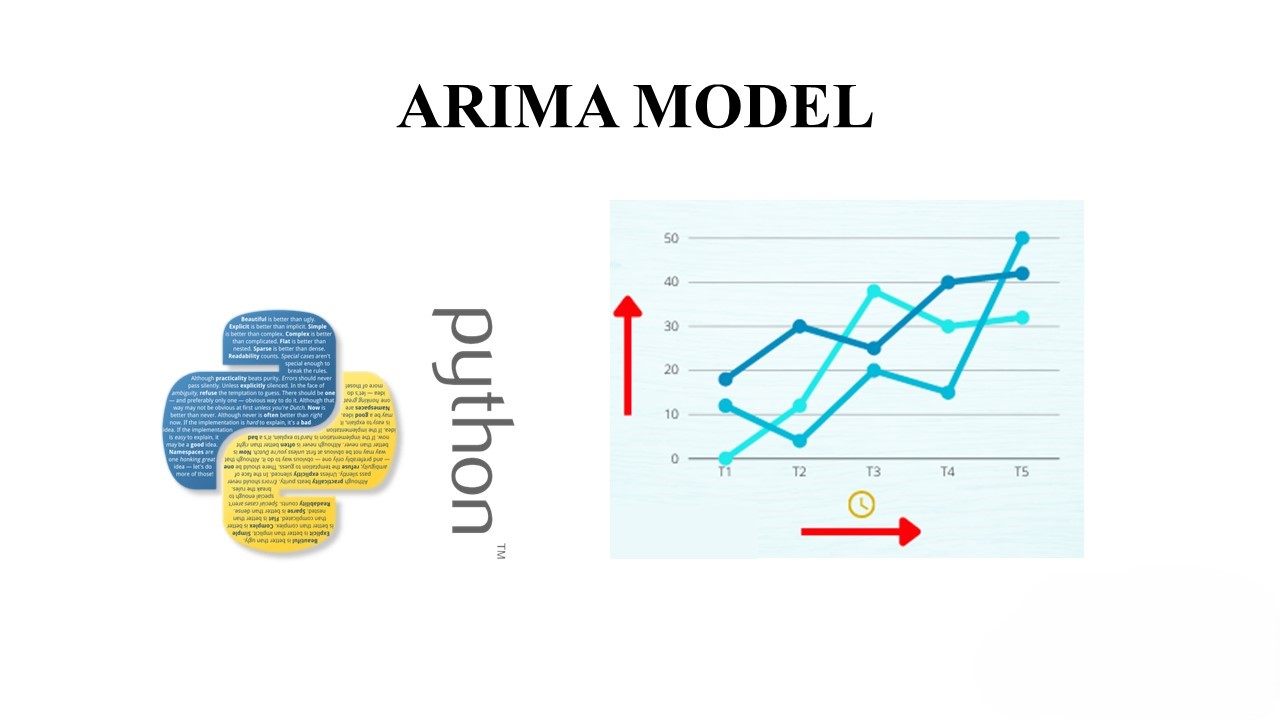

Exploring the Statistical Foundations of ARIMA Models

By Kishore Kumar K

In the realm of time series analysis, ARIMA (AutoRegressive Integrated Moving Average) models stand out as a powerful tool for forecasting. Understanding the statistical concepts behind ARIMA can greatly enhance your ability to leverage this model effectively. AutoRegressive (AR) Component: The AR part of ARIMA signifies that the evolving variable of…

-

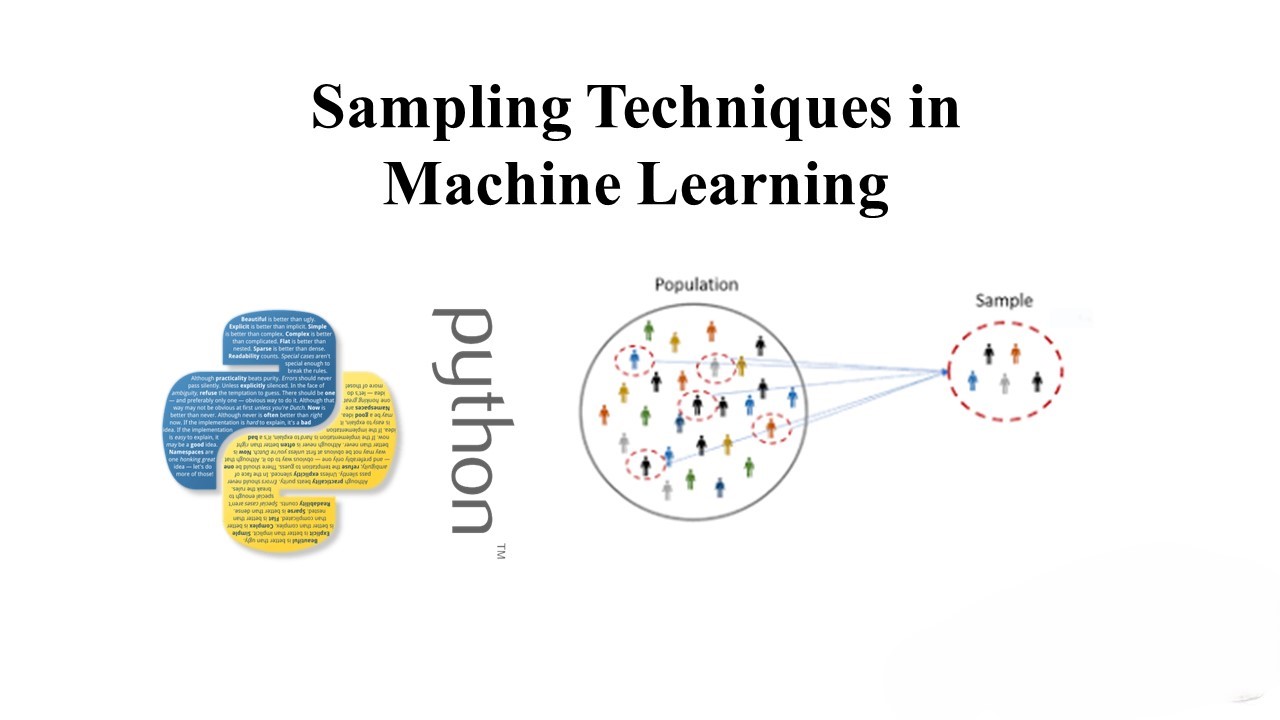

A Visual Guide To Sampling Techniques in Machine Learning

When working with large datasets, it’s often impractical to train machine learning models on the entire dataset. Instead, we opt to work with smaller, representative samples. However, the way we sample can significantly impact the performance and accuracy of our models. Let’s explore some commonly used sampling techniques: 🔹 Simple Random Sampling: Each data point…
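As a rough illustration of the first technique named in the excerpt above, here is a minimal sketch of simple random sampling using only Python's standard library. The helper name `simple_random_sample`, the toy `population`, and the `seed` parameter are assumptions made for this example; they are not taken from the original post.

```python
import random


def simple_random_sample(data, k, seed=None):
    """Draw k items without replacement; every item is equally likely to be picked.

    `seed` is a hypothetical convenience parameter so the draw can be reproduced.
    """
    rng = random.Random(seed)  # private generator, leaves global random state untouched
    return rng.sample(data, k)


# Toy population standing in for a large dataset (illustrative only).
population = list(range(100))
sample = simple_random_sample(population, 10, seed=0)
print(sample)
```

Using a dedicated `random.Random` instance rather than the module-level functions keeps the sample reproducible without affecting other code that relies on the global generator.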

-

Unlocking Anomaly Detection: Exploring Isolation Forests

In the vast landscape of machine learning, anomaly detection stands out as a critical application with wide-ranging implications. One powerful tool in this domain is the Isolation Forest algorithm, known for its efficiency and effectiveness in identifying outliers in data. Let’s delve into the fascinating world of Isolation Forests and their role in anomaly detection.…

-

The Mathematics Behind Machine Learning

Machine learning is a branch of artificial intelligence that enables computers to learn from data and make decisions or predictions without being explicitly programmed. At the core of machine learning algorithms lie mathematical concepts and principles that drive their functionality. In this blog post, we’ll explore some key mathematical concepts behind machine learning. Linear Algebra…

-

Data Preparation for Machine Learning

Data preparation is a crucial step in the machine learning pipeline. It involves cleaning, transforming, and organizing data to make it suitable for machine learning models. Proper data preparation ensures that the models can learn effectively from the data and make accurate predictions. Why is Data Preparation Important? Data preparation is essential for several reasons:…

-

Composite Estimators using Pipeline & FeatureUnions

In machine learning workflows, data often requires various preprocessing steps before it can be fed into a model. Composite estimators, such as Pipelines and FeatureUnions, provide a way to combine these preprocessing steps with the model training process. This blog post will explore the concepts of composite estimators and demonstrate their usage in scikit-learn (version…

-

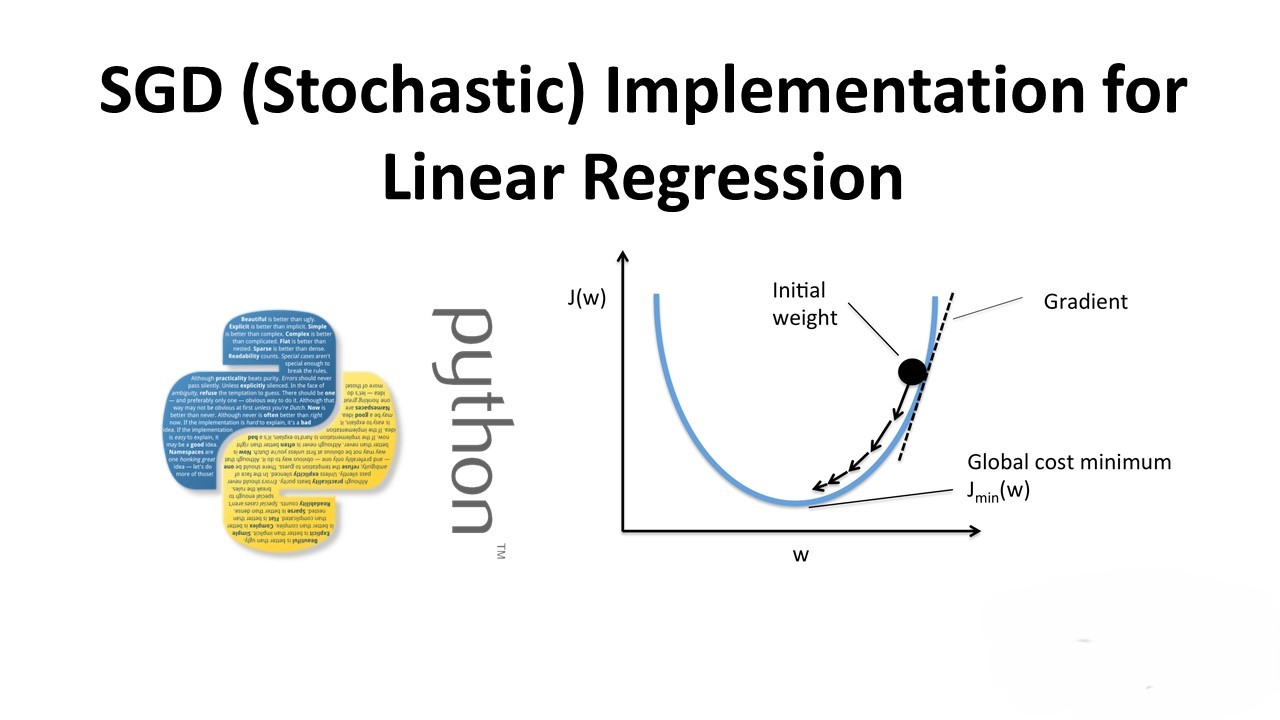

Custom SGD (Stochastic) Implementation for Linear Regression on Boston House Dataset

In this post, we’ll explore the implementation of Stochastic Gradient Descent (SGD) for Linear Regression on the Boston House dataset. We’ll compare our custom implementation with the SGD implementation provided by the popular machine learning library, scikit-learn. Importing Libraries. Data Loading and Preprocessing: We load the Boston House dataset, standardize the data, and split it…

-

Understanding Decision Trees: A Comprehensive Guide with Python Implementation

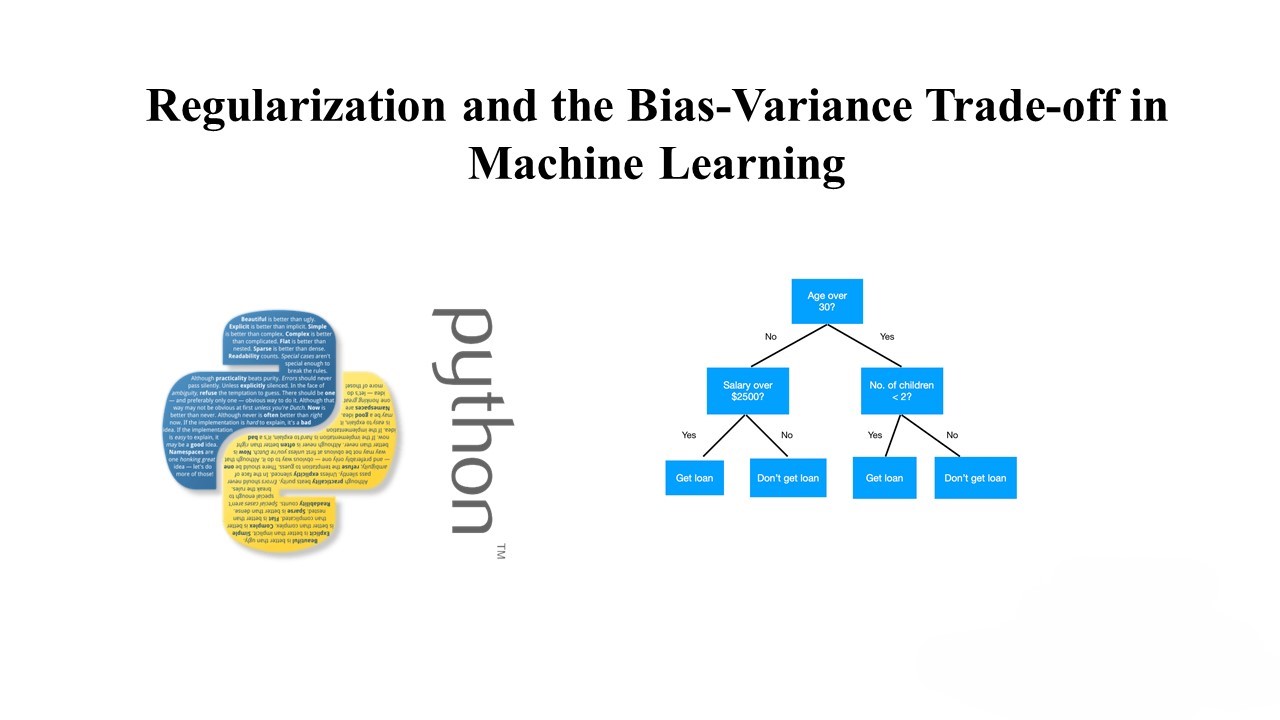

Introduction: Decision trees are powerful tools in the field of machine learning and data science. They are versatile, easy to interpret, and can handle both classification and regression tasks. In this blog post, we will explore decision trees in detail, understand how they work, and implement a decision tree classifier using Python. What is a…

-

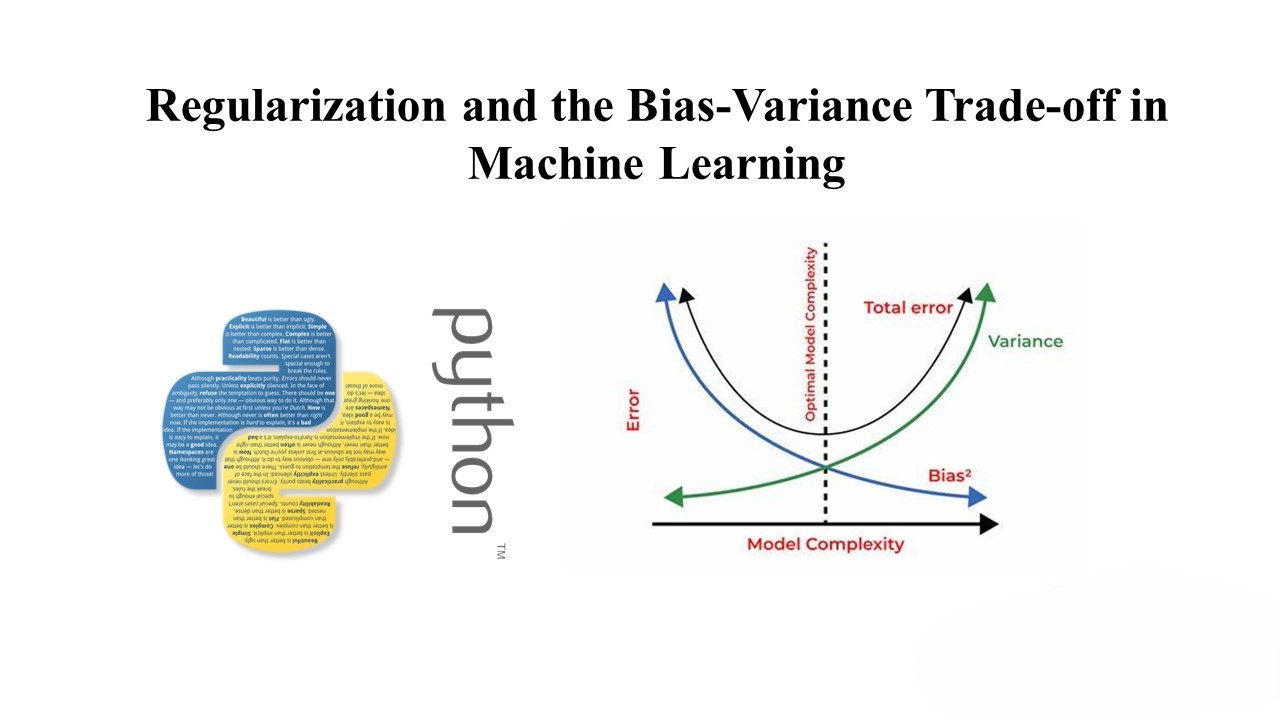

Regularization and the Bias-Variance Trade-off in Machine Learning

Overfitting is a common issue in machine learning models, where a model fits the training data too closely, leading to poor generalization on new data. Regularization is a technique used to prevent overfitting by adding a penalty term to the model’s loss function. This penalty encourages simpler models and helps strike a balance between bias…
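To make the idea of a penalty term concrete, here is a minimal sketch assuming an L2 (ridge-style) penalty on a one-feature linear model with no intercept. The choice of L2, the function name `fit_ridge_1d`, and the toy data are assumptions for illustration and do not come from the original post. Minimizing `sum((y - w*x)**2) + penalty * w**2` with respect to `w` gives the closed form `w = sum(x*y) / (sum(x*x) + penalty)`.

```python
def fit_ridge_1d(xs, ys, penalty):
    """Fit y ≈ w * x by minimizing squared error plus penalty * w**2.

    Setting the derivative of the penalized loss to zero yields
    w = sum(x*y) / (sum(x*x) + penalty).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + penalty)


# Toy data, roughly y = 2x with a little noise (illustrative only).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

w_plain = fit_ridge_1d(xs, ys, penalty=0.0)   # ordinary least squares
w_ridge = fit_ridge_1d(xs, ys, penalty=10.0)  # penalized fit

print(w_plain, w_ridge)
```

With a positive penalty the denominator grows, so the fitted weight is pulled toward zero: the model becomes simpler (more bias) in exchange for being less sensitive to the particular training points (less variance).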