Transfer learning is a powerful technique in the field of deep learning, especially in computer vision, where it allows us to leverage pre-trained models to solve new tasks with limited data. In this blog post, we’ll explore transfer learning in the context of computer vision and demonstrate how it can be implemented using Python and popular deep learning libraries like TensorFlow and Keras.

What is Transfer Learning?

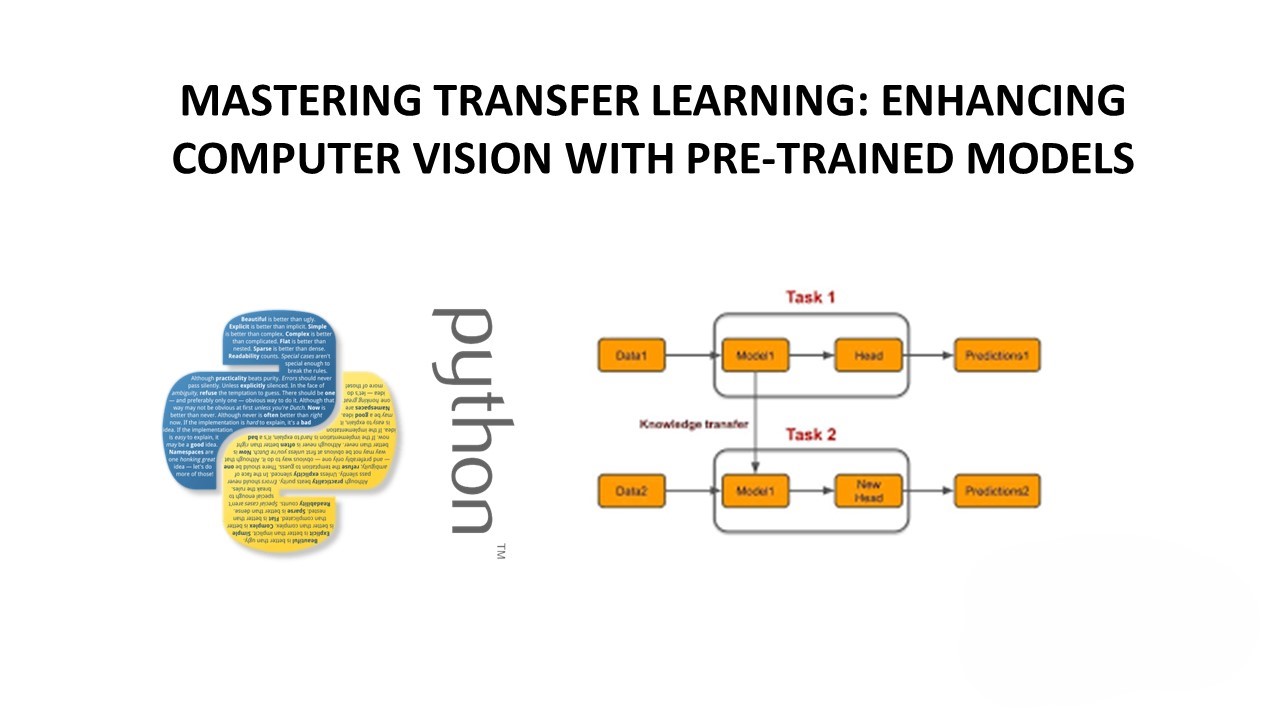

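Transfer learning involves taking a neural network that has already been trained on one task and adapting it to a new dataset or task. The idea is to reuse what the model learned from a large, general dataset (such as ImageNet), for example the low-level features like edges and textures captured by its early layers, instead of learning everything from scratch. In practice, this usually means either training only new classification layers on top of the frozen pre-trained layers, or additionally fine-tuning some of the pre-trained weights. Compared with training the same network from scratch, this can significantly reduce the amount of training data required and improve the model's performance, especially when the new dataset is small.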

Using Transfer Learning in Practice

To demonstrate transfer learning, let's consider a common scenario: classifying images of cats and dogs. We'll use VGG16, a convolutional neural network (CNN) pre-trained on the ImageNet dataset, keep its convolutional layers frozen as a feature extractor, and train new classification layers on top of them using a smaller dataset of cat and dog images. The resulting model can then classify new images into these two categories.
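
The classification layers added in the implementation sit on top of VGG16's convolutional base, so it helps to know what that base outputs and how many weights each part holds. The sketch below recomputes this in plain Python (no TensorFlow needed) from VGG16's layer configuration, assuming 3×3 convolutions with 'same' padding and five 2×2 max-pooling layers with stride 2. The config list and helper names are our own illustration, not part of Keras.

```python
# Channel widths of VGG16's 13 convolutional layers; "M" marks a 2x2 max-pooling layer.
VGG16_CONV_CONFIG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                     512, 512, 512, "M", 512, 512, 512, "M"]


def conv_base_stats(height, width, channels=3, config=VGG16_CONV_CONFIG):
    """Return (output_shape, parameter_count) for the convolutional base.

    Assumes 3x3 kernels with 'same' padding (spatial size unchanged) and
    2x2 pooling with stride 2 (spatial size halved, rounding down).
    """
    params = 0
    for layer in config:
        if layer == "M":
            height, width = height // 2, width // 2
        else:
            params += 3 * 3 * channels * layer + layer  # kernel weights + biases
            channels = layer
    return (height, width, channels), params


def dense_params(in_features, units):
    """Weights plus biases of a fully connected (Dense) layer."""
    return in_features * units + units


(out_h, out_w, out_c), base_params = conv_base_stats(224, 224)
flat = out_h * out_w * out_c                                     # size after Flatten()
head_params = dense_params(flat, 256) + dense_params(256, 1)     # Dense(256) + Dense(1)

print("conv base output:", (out_h, out_w, out_c))  # (7, 7, 512)
print("flattened features:", flat)                  # 25088
print("frozen base parameters:", base_params)       # 14714688
print("trainable head parameters:", head_params)    # 6423041
```

With the base frozen, only about 6.4 million head parameters are updated during training, compared with roughly 21.1 million if the whole network were trained. Nearly all of the head's weights belong to the Dense(256) layer fed by the 25,088 flattened features; Dropout adds no parameters.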

Implementation
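
The training step below uses `flow_from_directory`, which infers labels from folder names: each subdirectory of the training directory is treated as one class, and classes are assigned integer indices in alphanumeric order. With `class_mode='binary'` there should be exactly two such folders. The following sketch builds a hypothetical `cats/` and `dogs/` layout in a temporary directory and mimics that inference rule with the standard library; the folder names and helper functions are illustrative assumptions, not Keras code.

```python
import os
import tempfile


def make_binary_layout(root, class_names=("cats", "dogs"), images_per_class=2):
    """Create a placeholder tree of the form root/<class_name>/<file>.jpg."""
    for name in class_names:
        class_dir = os.path.join(root, name)
        os.makedirs(class_dir, exist_ok=True)
        for i in range(images_per_class):
            # Empty placeholder files; a real dataset would contain actual JPEG images.
            open(os.path.join(class_dir, f"{name}.{i}.jpg"), "wb").close()


def infer_class_indices(root):
    """Map each subdirectory name to an integer label, in alphanumeric order."""
    classes = sorted(
        entry for entry in os.listdir(root)
        if os.path.isdir(os.path.join(root, entry))
    )
    return {name: index for index, name in enumerate(classes)}


with tempfile.TemporaryDirectory() as root:
    make_binary_layout(root)
    class_indices = infer_class_indices(root)
    print(class_indices)  # {'cats': 0, 'dogs': 1}
```

Under this rule, a sigmoid output close to 1 corresponds to "dogs". After creating the real generator, `train_generator.class_indices` shows the mapping Keras actually used.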

First, we’ll import the necessary libraries:

```python
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.applications import VGG16
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten, Dropout
```

Next, we'll load the pre-trained VGG16 model without the top (fully connected) layers, since we'll be adding our own for the new task:

```python
base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))
```

We'll then freeze the weights of the base model so that they are not updated during training:

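```python
# Freeze every layer of the convolutional base.
# Setting base_model.trainable = False would have the same effect.
for layer in base_model.layers:
    layer.trainable = False
```

Next, we'll add our own classification layers on top of the base model: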

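```python
model = Sequential([
    base_model,                     # frozen VGG16 convolutional base
    Flatten(),                      # 7x7x512 feature maps -> vector of 25,088 values
    Dense(256, activation='relu'),  # new fully connected layer
    Dropout(0.5),                   # randomly zeroes 50% of activations, during training only
    Dense(1, activation='sigmoid')  # probability of the positive class (cat vs. dog)
])
```

We'll compile the model and then train it on our dataset of cat and dog images: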

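```python
from tensorflow.keras.applications.vgg16 import preprocess_input

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# preprocess_input applies the input normalization that VGG16's ImageNet weights expect;
# the remaining arguments add random augmentation to the training images.
train_datagen = ImageDataGenerator(preprocessing_function=preprocess_input, shear_range=0.2, zoom_range=0.2, horizontal_flip=True)

# Labels are inferred from the subdirectory names (one folder per class) under the training directory.
train_generator = train_datagen.flow_from_directory('path_to_training_data', target_size=(224, 224), batch_size=32, class_mode='binary')

model.fit(train_generator, epochs=5, steps_per_epoch=len(train_generator), verbose=1)
```

Conclusion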

Transfer learning is a valuable technique in deep learning, particularly in computer vision, where it can deliver good results even with limited data. By reusing pre-trained models and adapting them to new tasks, we can save training time and compute while still building accurate models for a wide range of applications.
