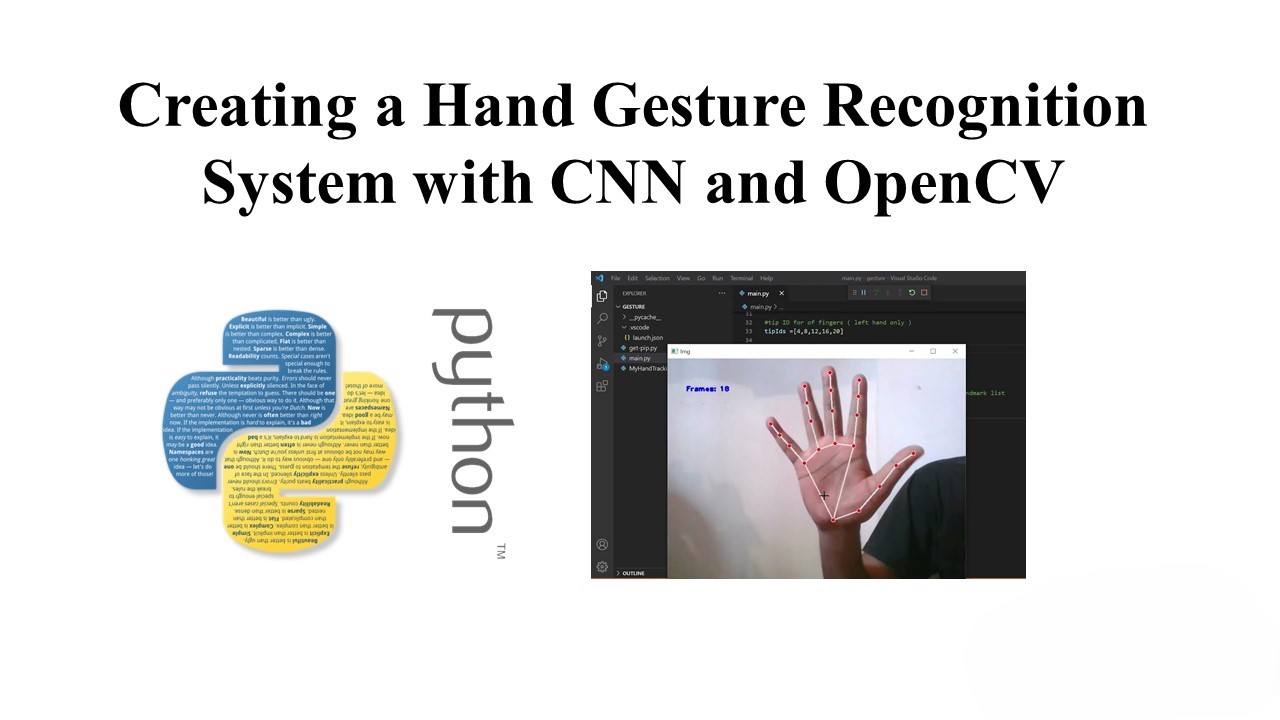

Welcome back to the second part of our Hand Gesture Recognition project. In this segment, we will integrate the trained Convolutional Neural Network (CNN) with the OpenCV library to create a real-time hand gesture recognition system. Let’s dive in!

Setting Up the Environment

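Before we begin, make sure the required libraries are installed. OpenCV's classic object trackers, such as KCF and CSRT, ship only with the contrib build. Install `opencv-contrib-python` instead of `opencv-python`, and keep only one of the two installed, because both provide the same `cv2` module:

```bash
pip install opencv-contrib-python
pip install tensorflow
pip install matplotlib
```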
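Having both OpenCV builds installed is a common cause of "tracker not found" errors. The sketch below is our own helper, not part of the original project. It reports which of the relevant PyPI distributions are installed, using only the standard library and without importing them:

```python
from importlib.metadata import PackageNotFoundError, version

# PyPI distribution names this tutorial relies on
PACKAGES = ["opencv-python", "opencv-contrib-python", "tensorflow", "matplotlib", "numpy"]


def installed_versions(packages=PACKAGES):
    """Return {distribution name: version string, or None if not installed}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    found = installed_versions()
    for name, ver in found.items():
        print(f"{name:25s} {ver or 'not installed'}")
    if found["opencv-python"] and found["opencv-contrib-python"]:
        print("Warning: both OpenCV builds are installed; uninstall one of them.")
    elif not found["opencv-contrib-python"]:
        print("Note: contrib trackers such as KCF need opencv-contrib-python.")
```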

Initializing the Environment

Let’s start by initializing our environment and setting up the necessary components. This includes importing the required libraries, setting up the video capture, and defining initial parameters.

```python
# Import necessary libraries
import os
import sys

import cv2
import numpy as np
from tensorflow.keras.models import load_model

# Load the pre-trained hand gesture recognition model from Part 1
hand_model = load_model("hand_model_gray.hdf5", compile=False)

# Map class indices to gesture labels
classes = {0: 'fist', 1: 'five', 2: 'point', 3: 'swing'}

# Set up video capture from the default camera
video = cv2.VideoCapture(0)

# Check that the camera opened successfully
if not video.isOpened():
    sys.exit("Could not open video")

# Read the first frame from the video
ok, frame = video.read()
if not ok:
    sys.exit("Cannot read video")

# Use the first frame as the initial background frame.
# Keep your hand out of view at startup (press "r" later to re-capture it).
bg = frame.copy()

# 3x3 kernel for the morphological operations (opening, closing, dilation) on the mask
kernel = np.ones((3, 3), np.uint8)

# Display positions (pixel coordinates)
positions = {'hand_pose': (15, 40), 'fps': (15, 20), 'null_pos': (200, 200)}

# Tracking parameters
bbox_initial = (116, 116, 170, 170)  # Starting bounding box (x, y, width, height)
bbox = bbox_initial
tracking = -1  # -1 means "not tracking yet"
```
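The main loop also uses `mask_array`, `setup_tracker`, `get_unique_name` and `CURR_POS`, none of which are defined in this part. They presumably come from Part 1 or the project's helper code. If you don't have them, here is a minimal sketch built on our own assumptions:

- `mask_array` zeroes every pixel outside the mask.
- `setup_tracker(2)` is assumed to select the KCF tracker.
- `get_unique_name` returns the next unused integer suffix in a folder and creates the folder if needed.
- `CURR_POS` is the gesture label whose folder the `s` key saves into.

All four are hypothetical stand-ins, not the original project's code.

```python
import os

import numpy as np

# Hypothetical: gesture label whose folder (data/<CURR_POS>/) the "s" key saves crops into.
CURR_POS = "fist"

# Hypothetical ordering; we assume setup_tracker(2) is meant to pick KCF.
TRACKER_TYPES = ["BOOSTING", "MIL", "KCF", "TLD", "MEDIANFLOW", "CSRT"]


def mask_array(array, imask):
    """Return a copy of `array` with every pixel outside the boolean mask `imask` set to 0."""
    if array.shape[:2] != imask.shape:
        raise ValueError("array and imask must have the same height and width")
    output = np.zeros_like(array)
    output[imask] = array[imask]  # boolean indexing copies whole pixels (all channels)
    return output


def setup_tracker(ttype):
    """Create an OpenCV tracker by its index in TRACKER_TYPES (hypothetical helper)."""
    name = TRACKER_TYPES[ttype]  # raises IndexError for an unknown index
    import cv2  # imported lazily so the other helpers work without OpenCV

    factory_name = "Tracker{}_create".format(
        {"BOOSTING": "Boosting", "MEDIANFLOW": "MedianFlow"}.get(name, name))
    # OpenCV >= 4.5.1 exposes MIL/KCF/CSRT under cv2 and older trackers under cv2.legacy;
    # KCF, CSRT and the legacy trackers require opencv-contrib-python.
    for namespace in (cv2, getattr(cv2, "legacy", None)):
        factory = getattr(namespace, factory_name, None)
        if factory is not None:
            return factory()
    raise RuntimeError("{} tracker not available; install opencv-contrib-python".format(name))


def get_unique_name(directory):
    """Return an integer not yet used as the numeric suffix (name_<n>.ext) of a file in `directory`."""
    os.makedirs(directory, exist_ok=True)  # cv2.imwrite fails silently if the folder is missing
    used = set()
    for fname in os.listdir(directory):
        suffix = os.path.splitext(fname)[0].rsplit("_", 1)[-1]
        if suffix.isdigit():
            used.add(int(suffix))
    return max(used, default=-1) + 1
```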

Real-Time Video Processing

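Now let’s enter the main loop, where we capture, process, and display video frames in real time. Each iteration performs background subtraction, hand tracking, and gesture recognition, then checks the keyboard. Esc quits, r (or l) resets the background and the bounding box, t starts tracking from the current box, and s saves the current hand crop to disk.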
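To see what the first background-subtraction steps compute, here is a NumPy-only sketch. It is our own illustration, not the project's code, and `foreground_mask` is a hypothetical name. It approximates three operations:

- `cv2.absdiff`, the per-pixel absolute difference.
- The BGR-to-gray conversion with OpenCV's documented weights (0.299 R + 0.587 G + 0.114 B).
- `cv2.threshold` with `THRESH_BINARY` at 10.

The morphological cleanup is left out. OpenCV's fixed-point gray conversion can differ from this float version by one level.

```python
import numpy as np

# OpenCV's RGB -> gray weights, reordered for BGR channel order
BGR_WEIGHTS = np.array([0.114, 0.587, 0.299])


def foreground_mask(bg, frame, thresh=10):
    """Approximate cv2.absdiff -> cv2.cvtColor(BGR2GRAY) -> cv2.threshold(THRESH_BINARY)."""
    # Widen to int16 before subtracting so uint8 values do not wrap around
    diff = np.abs(frame.astype(np.int16) - bg.astype(np.int16)).astype(np.uint8)
    gray = np.rint(diff @ BGR_WEIGHTS).astype(np.uint8)
    # THRESH_BINARY: pixels strictly greater than `thresh` become 255, the rest 0
    return np.where(gray > thresh, 255, 0).astype(np.uint8)


if __name__ == "__main__":
    bg = np.zeros((4, 4, 3), dtype=np.uint8)
    frame = bg.copy()
    frame[1:3, 1:3] = 200  # a bright "hand" enters the scene
    frame[0, 0] = 5        # sensor noise, below the threshold
    print(foreground_mask(bg, frame))
```

The low threshold of 10 removes faint sensor noise. The opening, closing, and dilation steps in the loop then remove isolated specks and fill holes in the hand blob.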

```python
while True:
    # Read a new frame from the video (check before using it)
    ok, frame = video.read()
    if not ok:
        break

    display = frame.copy()
    data_display = np.zeros_like(display, dtype=np.uint8)  # Black canvas for the prediction bars

    # Start timer for the FPS counter
    timer = cv2.getTickCount()

    # --- Background subtraction ---
    diff = cv2.absdiff(bg, frame)
    mask = cv2.cvtColor(diff, cv2.COLOR_BGR2GRAY)
    _, thresh = cv2.threshold(mask, 10, 255, cv2.THRESH_BINARY)
    opening = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel)    # remove speckle noise
    closing = cv2.morphologyEx(opening, cv2.MORPH_CLOSE, kernel)  # fill small holes
    img_dilation = cv2.dilate(closing, kernel, iterations=2)       # grow the hand blob
    imask = img_dilation > 0
    foreground = mask_array(frame, imask)  # keep only the foreground pixels
    foreground_display = foreground.copy()

    # --- Hand tracking ---
    if tracking != -1:
        tracking, bbox = tracker.update(foreground)
        tracking = int(tracking)

    # --- Hand gesture recognition ---
    x, y, w, h = (int(v) for v in bbox)
    # Clamp the top-left corner: negative indices would wrap around in NumPy slicing
    hand_crop = img_dilation[max(y, 0):y + h, max(x, 0):x + w]

    try:
        # Resize the cropped mask to the 54x54 single-channel input the model expects
        hand_crop_resized = cv2.resize(hand_crop, (54, 54)).reshape(1, 54, 54, 1)
        prediction = hand_model.predict(hand_crop_resized, verbose=0)
        predi = int(prediction[0].argmax())
        gesture = classes[predi]

        # Draw one bar per class: green for the predicted class, red for the others
        for i, pred in enumerate(prediction[0]):
            barx = positions['hand_pose'][0]
            bary = 60 + i * 60
            bar_length = int(400 * pred) + barx
            colour = (0, 255, 0) if i == predi else (0, 0, 255)
            cv2.putText(data_display, "{}: {:.2f}".format(classes[i], pred), (barx, 30 + i * 60),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.75, (255, 255, 255), 2)
            cv2.rectangle(data_display, (barx, bary), (bar_length, bary - 20), colour, -1, 1)

        cv2.putText(display, "hand pose: {}".format(gesture), positions['hand_pose'],
                    cv2.FONT_HERSHEY_SIMPLEX, 0.75, (0, 0, 255), 2)
        cv2.putText(foreground_display, "hand pose: {}".format(gesture), positions['hand_pose'],
                    cv2.FONT_HERSHEY_SIMPLEX, 0.75, (0, 0, 255), 2)
    except Exception:
        # e.g. an empty crop after the box has left the frame
        cv2.putText(display, "hand pose: error", positions['hand_pose'],
                    cv2.FONT_HERSHEY_SIMPLEX, 0.75, (0, 0, 255), 2)
        cv2.putText(foreground_display, "hand pose: error", positions['hand_pose'],
                    cv2.FONT_HERSHEY_SIMPLEX, 0.75, (0, 0, 255), 2)

    # Draw the bounding box
    p1 = (int(bbox[0]), int(bbox[1]))
    p2 = (int(bbox[0] + bbox[2]), int(bbox[1] + bbox[3]))
    cv2.rectangle(foreground_display, p1, p2, (255, 0, 0), 2, 1)
    cv2.rectangle(display, p1, p2, (255, 0, 0), 2, 1)

    # Offset of the hand centre from the reference ("null") point.
    # It is only visualized here; nothing in this loop moves the actual mouse cursor.
    hand_pos = ((p1[0] + p2[0]) // 2, (p1[1] + p2[1]) // 2)
    mouse_change = (hand_pos[0] - positions['null_pos'][0],
                    positions['null_pos'][1] - hand_pos[1])  # y > 0 when the hand is above null_pos
    cv2.circle(display, positions['null_pos'], 5, (0, 0, 255), -1)
    cv2.circle(display, hand_pos, 5, (0, 255, 0), -1)
    cv2.line(display, positions['null_pos'], hand_pos, (255, 0, 0), 5)

    # Processing rate in frames per second (excludes the time spent in video.read())
    fps = cv2.getTickFrequency() / (cv2.getTickCount() - timer)
    cv2.putText(foreground_display, "FPS : " + str(int(fps)), positions['fps'],
                cv2.FONT_HERSHEY_SIMPLEX, 0.65, (50, 170, 50), 2)
    cv2.putText(display, "FPS : " + str(int(fps)), positions['fps'],
                cv2.FONT_HERSHEY_SIMPLEX, 0.65, (50, 170, 50), 2)

    # Show the final and intermediate images
    cv2.imshow("display", display)
    cv2.imshow("data", data_display)
    cv2.imshow("diff", diff)
    cv2.imshow("thresh", thresh)
    cv2.imshow("img_dilation", img_dilation)
    if hand_crop.size > 0:  # imshow raises an error on an empty image
        cv2.imshow("hand_crop", hand_crop)
    cv2.imshow("foreground_display", foreground_display)

    # Keyboard controls
    k = cv2.waitKey(1) & 0xff
    if k == 27:  # Esc: quit
        break
    elif k in (ord('r'), ord('l')):  # r / l: reset background and bounding box
        bg = frame.copy()
        bbox = bbox_initial
        tracking = -1
    elif k == ord('t'):  # t: start tracking from the current box
        tracker = setup_tracker(2)
        tracker.init(frame, bbox)  # returns None in OpenCV >= 4.5.1, a bool in older versions
        tracking = 1
    elif k == ord('s'):  # s: save the current hand crop as training data
        save_dir = os.path.join("data", CURR_POS)
        os.makedirs(save_dir, exist_ok=True)
        fname = os.path.join(save_dir, "{}_{}.jpg".format(CURR_POS, get_unique_name(save_dir)))
        cv2.imwrite(fname, hand_crop)
    elif k != 255:  # any other key: print its code
        print(k)

video.release()
cv2.destroyAllWindows()
```
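The loop computes `mouse_change`, the hand centre's offset from `null_pos`, but never moves the real cursor. Below is one possible way to turn that offset into cursor motion, sketched under our own assumptions:

- `offset_to_cursor_step` is a hypothetical helper.
- Offsets within a 20-pixel dead zone are ignored, and the rest is scaled by a linear gain.
- Screen y grows downward, so the y component is negated relative to `mouse_change`.

The resulting step could then be passed to a library such as `pyautogui` (`pyautogui.moveRel(dx, dy)`). That library is not part of this project.

```python
def offset_to_cursor_step(mouse_change, dead_zone=20, gain=0.2):
    """Map the hand's offset from null_pos to a per-frame cursor step (dx, dy) in screen pixels.

    mouse_change uses the loop's convention: x grows to the right and y is
    positive when the hand is ABOVE the null point. Screen y grows downward,
    so the y component is negated.
    """
    dx, dy_up = mouse_change
    step = []
    for value in (dx, -dy_up):
        if abs(value) <= dead_zone:
            step.append(0)  # inside the dead zone: keep the cursor still
        else:
            # Only the distance beyond the dead zone counts, so motion ramps up smoothly
            excess = abs(value) - dead_zone
            step.append(round(gain * excess) * (1 if value > 0 else -1))
    return tuple(step)
```

Inside the loop you would call `offset_to_cursor_step(mouse_change)` once per frame. The dead zone keeps the cursor from drifting while the hand rests near `null_pos`.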

Conclusion

Congratulations! You’ve now completed the implementation of a real-time hand gesture recognition system. The project combines a Convolutional Neural Network that classifies the segmented hand with OpenCV for background subtraction, tracking, and display.

Feel free to explore and enhance the system further, perhaps by adding more gestures or refining the tracking mechanism. This project opens the door to a wide range of applications, from gesture-controlled interfaces to interactive experiences.

Thank you for joining us in this two-part blog series. We hope you enjoyed building this hand gesture recognition system!
