Tag: #AI

-

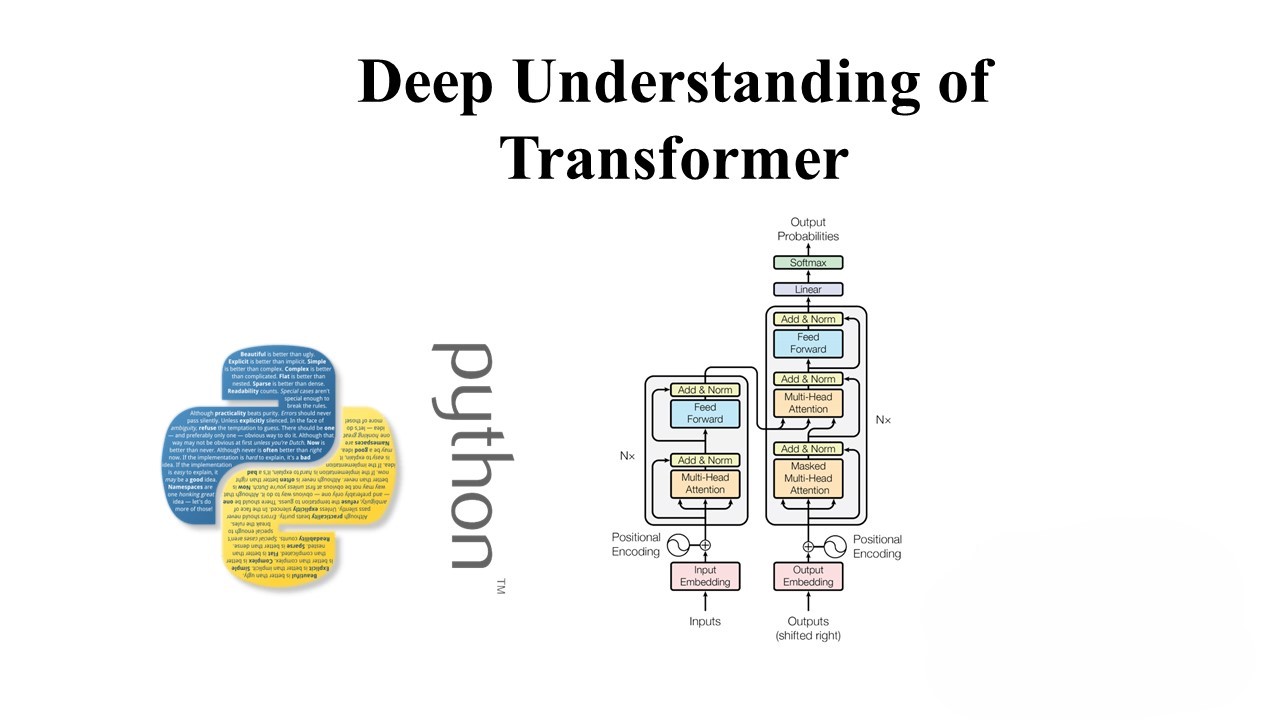

A Deep Dive into Transformers and Their Function

Introduction: In recent years, generative AI has undergone a paradigm shift with the introduction of transformer models. These models, characterized by their attention mechanisms, have revolutionized natural language processing (NLP) and other generative tasks. In this blog post, we’ll explore the transformer architecture, its applications in NLP, and its extension to other creative domains. Understanding…

-

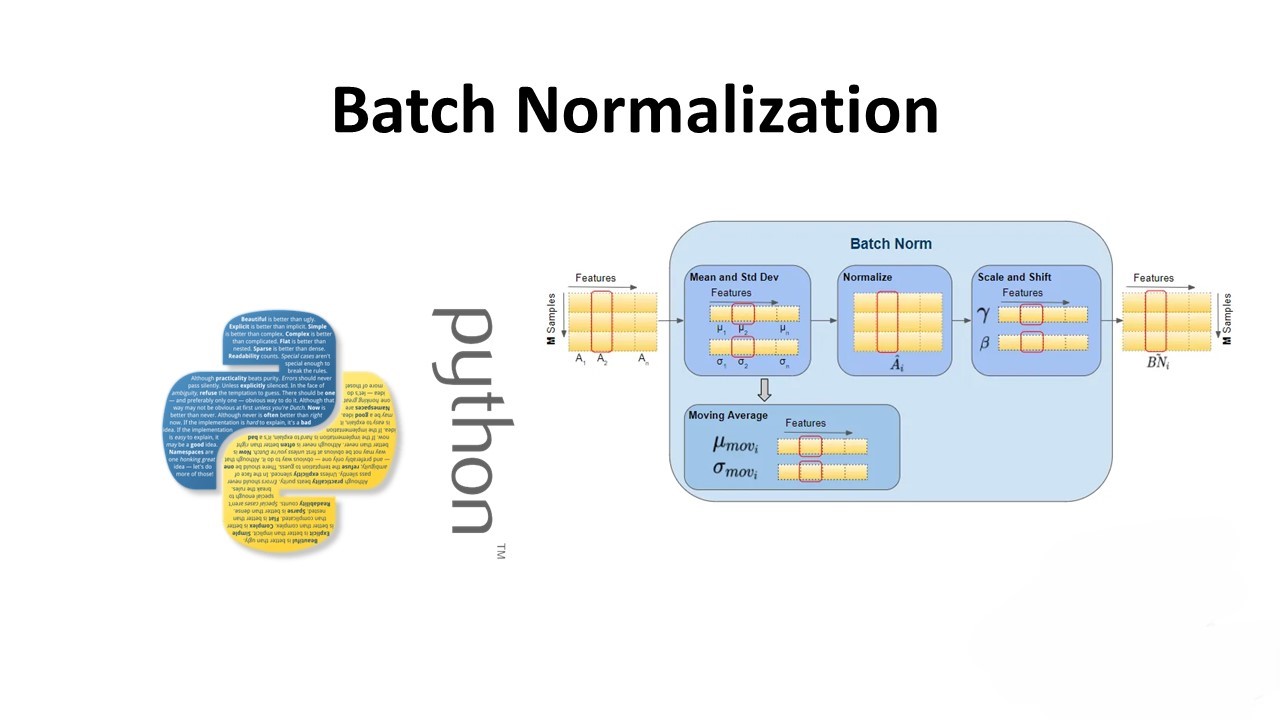

Optimizing Deep Learning: A Comprehensive Guide to Batch Normalization

Batch Normalization (BN) is a technique used in deep learning to improve the training of deep neural networks by reducing internal covariate shift. This problem occurs when the distribution of the inputs to each layer of the network changes during training, which makes the network difficult to train effectively. BN addresses this issue…