Tag: #NeuralNetworks

-

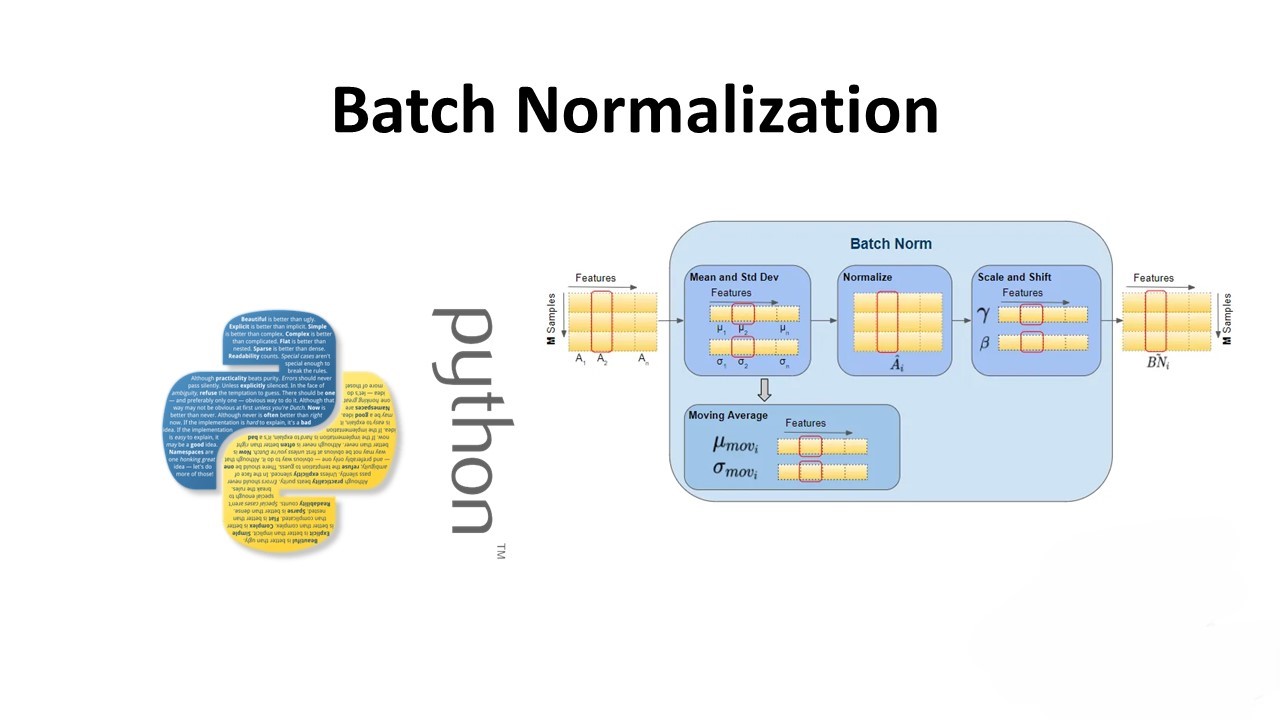

Optimizing Deep Learning: A Comprehensive Guide to Batch Normalization

Batch Normalization (BN) is a deep learning technique that improves the training of deep neural networks by reducing internal covariate shift: the change in the distribution of each layer’s inputs during training, which makes the network harder to train effectively. BN addresses this issue…
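To make the idea in this teaser concrete, here is a minimal sketch of the training-time normalization step in plain Python. It is not taken from the linked post. The function name `batch_norm`, the parameters `gamma`, `beta` and `eps`, and the toy batch are all assumptions chosen for illustration, and a real layer would also learn `gamma` and `beta` and track running statistics for inference.

```python
import math

def batch_norm(batch, gamma, beta, eps=1e-5):
    """Normalize each feature over the batch, then scale and shift.

    batch: list of samples, each a list of feature values.
    gamma, beta: per-feature scale and shift (fixed here; learned in a real network).
    eps: small constant that keeps the division stable when variance is near zero.
    """
    n = len(batch)
    n_features = len(batch[0])
    out = [[0.0] * n_features for _ in range(n)]
    for j in range(n_features):
        column = [sample[j] for sample in batch]
        mean = sum(column) / n
        # Biased (divide-by-n) variance over the batch dimension.
        var = sum((x - mean) ** 2 for x in column) / n
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(n):
            out[i][j] = gamma[j] * (column[i] - mean) * inv_std + beta[j]
    return out

# Hypothetical toy batch: two features on very different scales.
batch = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]]
normalized = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
```

With `gamma` set to 1 and `beta` set to 0, both features end up with roughly zero mean and unit variance across the batch, regardless of their original scale.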

-

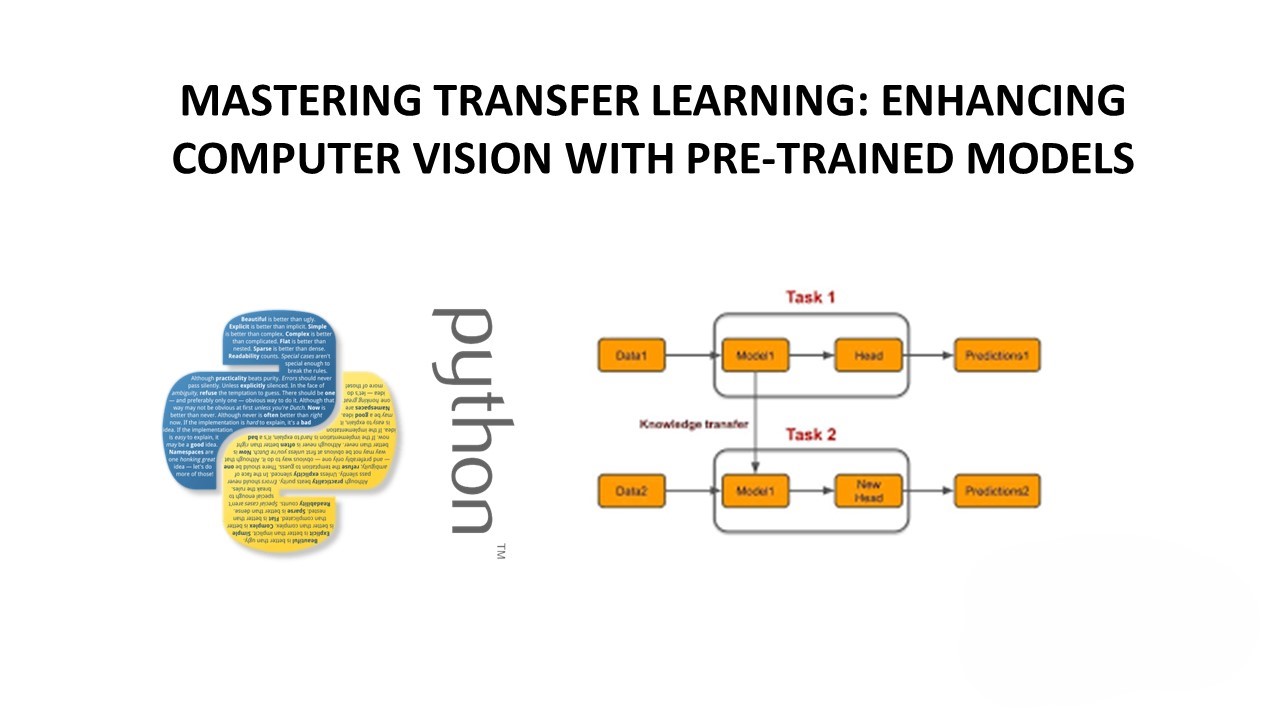

Mastering Transfer Learning: Enhancing Computer Vision with Pre-Trained Models

Transfer learning is a powerful technique in the field of deep learning, especially in computer vision, where it allows us to leverage pre-trained models to solve new tasks with limited data. In this blog post, we’ll explore transfer learning in the context of computer vision and demonstrate how it can be implemented using Python and…

-

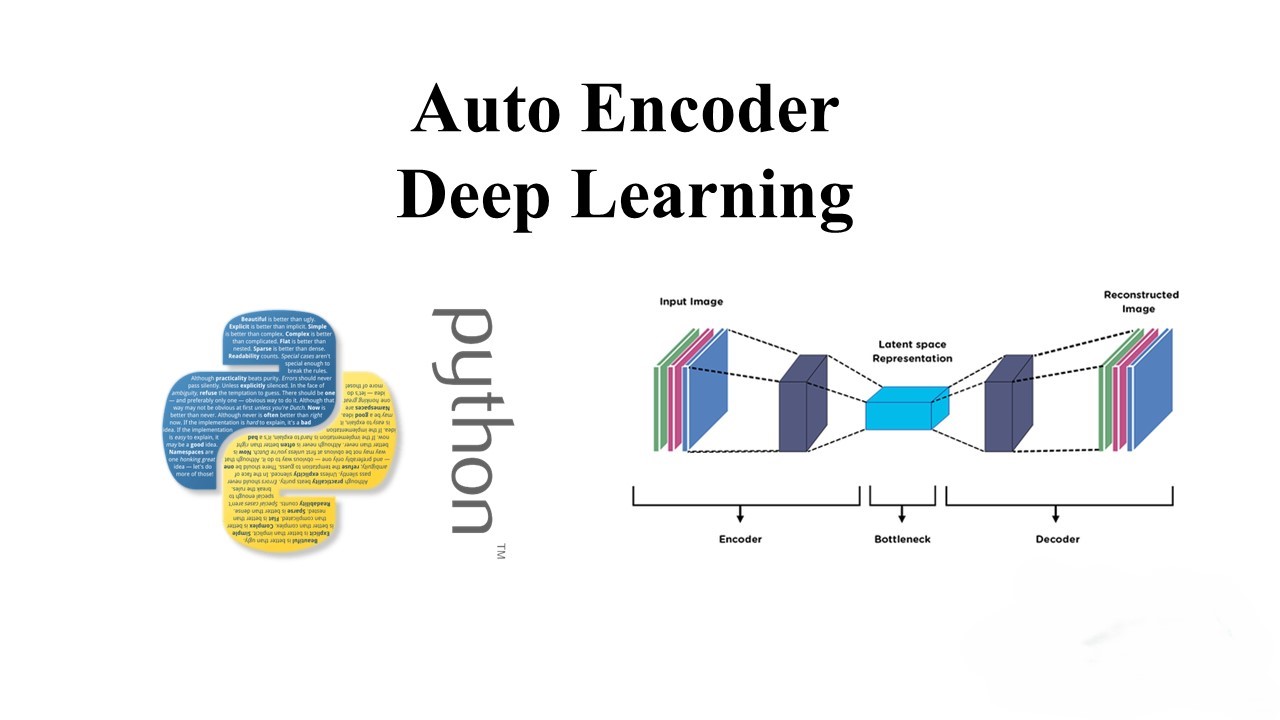

Unlocking the Potential of Autoencoders: A Deep Dive

In the realm of unsupervised learning, autoencoders stand out as powerful tools for data representation and feature learning. These neural networks are adept at capturing complex patterns in data, making them invaluable for tasks like dimensionality reduction, anomaly detection, and data denoising. Let’s delve into the inner workings of autoencoders and explore their practical applications.…

-

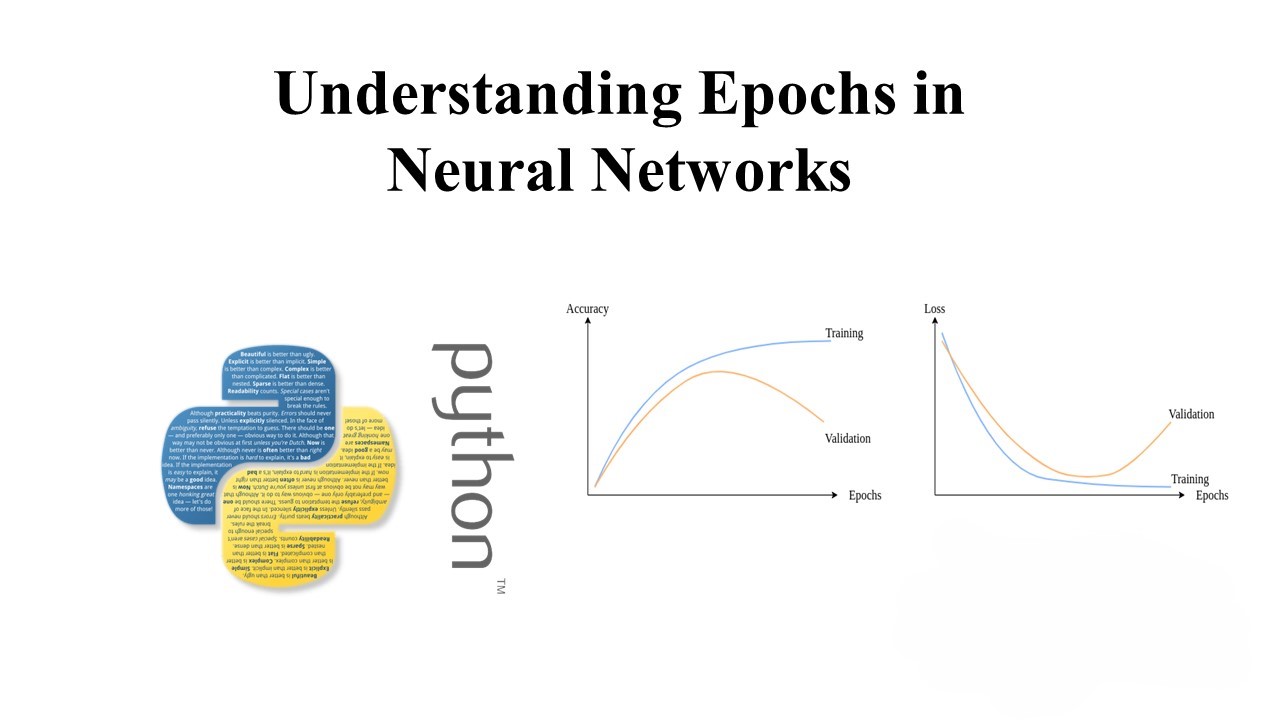

Understanding Epochs in Neural Networks: A Comprehensive Guide

In this tutorial, we’ll dive deep into the concept of epochs in neural networks. We’ll explore how the number of epochs impacts training convergence and how early stopping can be used to optimize model generalization.

Neural Networks: A Brief Overview

Neural networks are powerful supervised machine learning algorithms commonly used for solving classification or regression…
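The early stopping idea mentioned in this teaser can be sketched without any framework. The snippet below is an illustrative assumption, not the linked tutorial's code: `early_stopping_epoch`, `patience`, `min_delta` and the list of validation losses are hypothetical names and made-up values. It scans per-epoch validation losses and stops once the loss has failed to improve for `patience` consecutive epochs.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, stopped_at) for a sequence of validation losses.

    Training is considered stopped at the first epoch where the loss has not
    improved by more than min_delta for `patience` consecutive epochs.
    If that never happens, stopped_at is the last epoch.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the patience counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Made-up validation losses: improving at first, then rising (overfitting).
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
best_epoch, stopped_at = early_stopping_epoch(losses, patience=2)
print(best_epoch, stopped_at)  # prints "3 5"
```

In practice, you would keep the model weights saved at `best_epoch` rather than the weights from the epoch where training stopped.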