Tag: #NLP

-

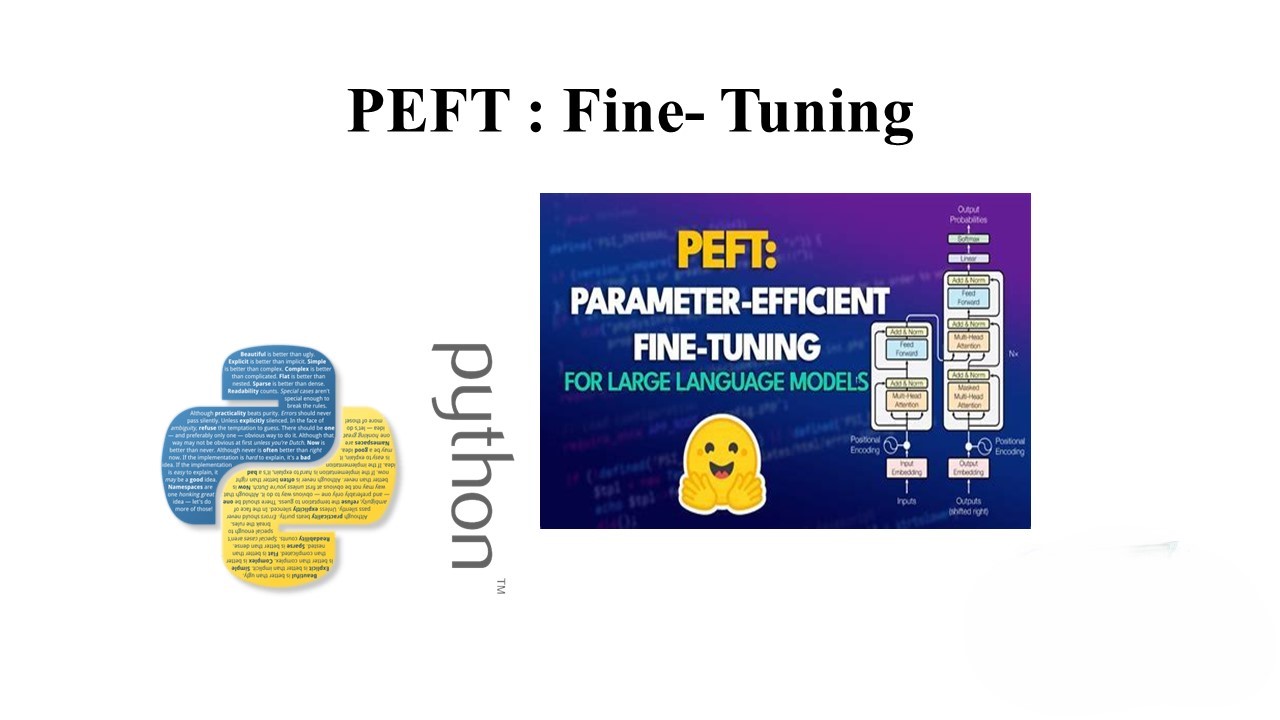

Parameter-Efficient Fine-Tuning of Large Language Models with Hugging Face’s PEFT Library

Introduction: Large Language Models (LLMs) like GPT, T5, and BERT have shown remarkable performance in NLP tasks. However, fine-tuning these models on downstream tasks can be computationally expensive. Parameter-Efficient Fine-Tuning (PEFT) approaches aim to address this challenge by fine-tuning only a small number of parameters while freezing most of the pretrained model. In this blog…

-

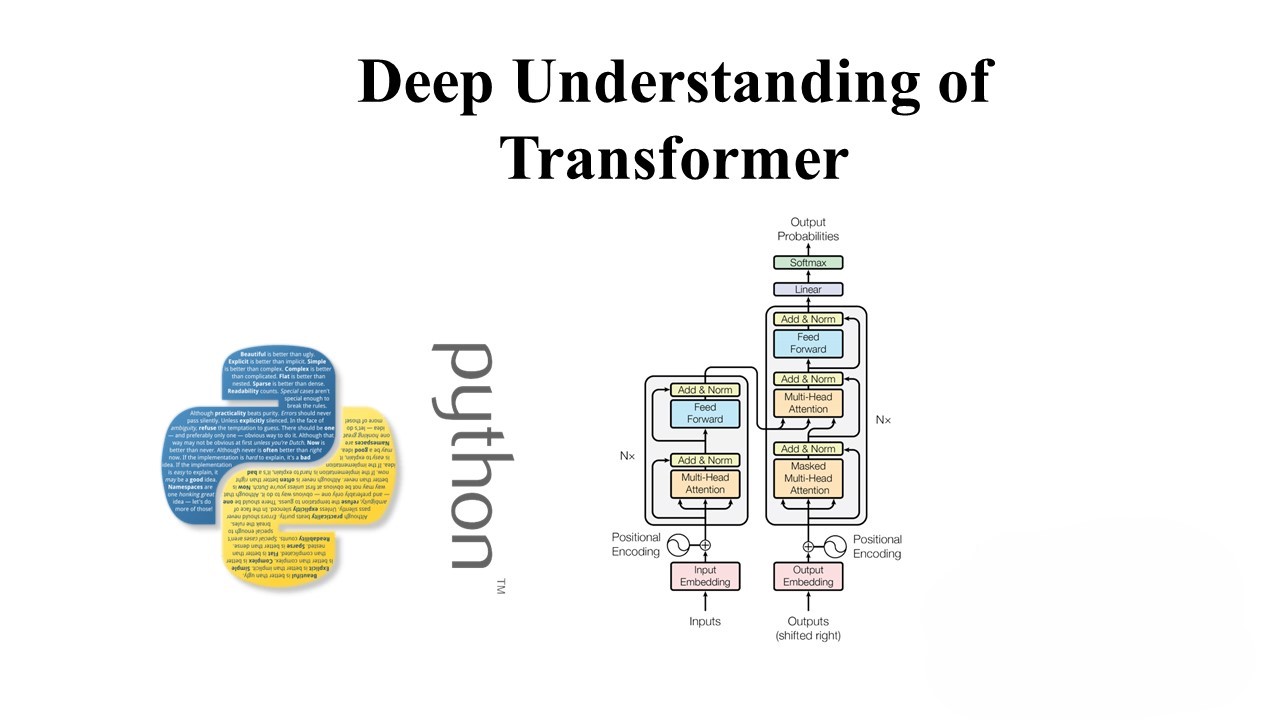

A Deep Dive into Transformers and its Function

Introduction: In recent years, Generative AI has witnessed a paradigm shift with the introduction of transformer models. These models, characterized by their attention mechanisms, have revolutionized natural language processing (NLP) and other generative tasks. In this blog post, we’ll explore the transformer architecture, its applications in NLP, and its extension to other creative domains. Understanding…

-

Sentiment Analysis: Unveiling the Power of Text Analysis

In the era of big data, understanding customer sentiment is crucial for businesses to make informed decisions. Sentiment analysis, also known as opinion mining, is a powerful technique that helps businesses extract valuable insights from text data. Whether it’s understanding customer feedback, monitoring social media chatter, or analyzing product reviews, sentiment analysis can provide invaluable…

-

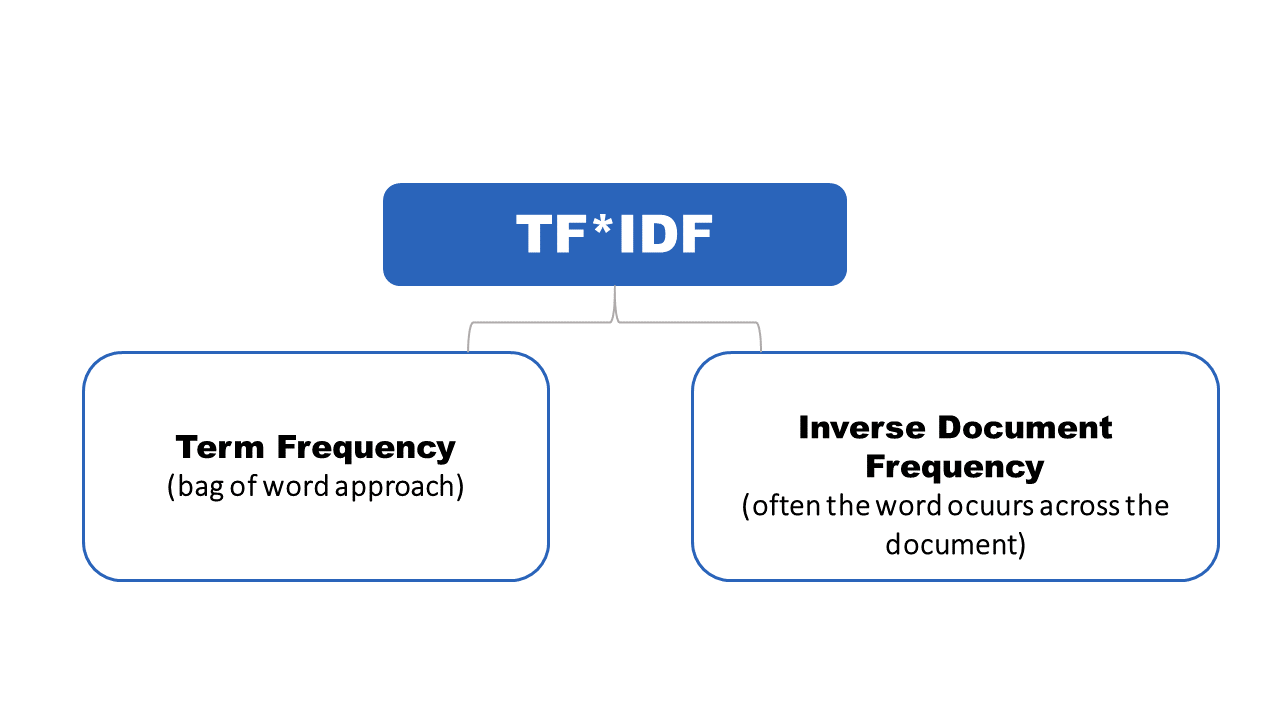

A Deep Dive into Text Classification with TF-IDF

Introduction: Unlocking the potential within textual data is a rewarding journey, and text classification, a cornerstone of Natural Language Processing (NLP), stands as a beacon in this exploration. In this blog post, we delve into the intricacies of text classification using Python, Pandas, NLTK, and scikit-learn. Our practical example revolves around travel- and food-related sentences,…