Tag: #Regularization


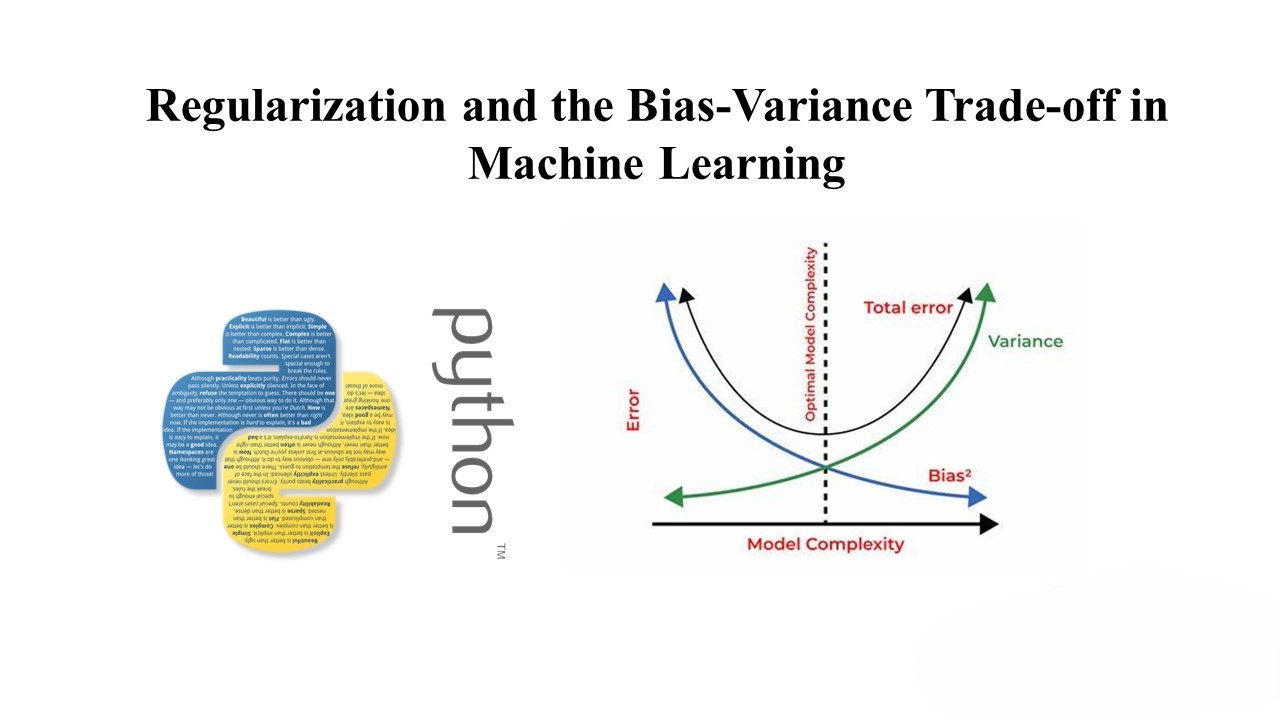

Regularization and the Bias-Variance Trade-off in Machine Learning

Overfitting is a common issue in machine learning models, where a model fits the training data too closely, leading to poor generalization on new data. Regularization is a technique used to prevent overfitting by adding a penalty term to the model’s loss function. This penalty encourages simpler models and helps strike a balance between bias…
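The teaser describes regularization as a penalty term added to the loss function. Below is a minimal sketch of that idea in Python. It assumes an L2 (ridge / weight-decay) penalty on a linear model with no bias term; the article itself may use a different penalty. The function names `predict`, `regularized_loss`, and `fit` and the strength parameter `lam` are hypothetical and chosen for illustration.

```python
from typing import List, Sequence

Vector = Sequence[float]


def predict(w: Vector, x: Vector) -> float:
    """Linear model without a bias term: y_hat = w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def regularized_loss(w: Vector, xs: Sequence[Vector], ys: Vector, lam: float) -> float:
    """Mean squared error plus lam * sum(w_j^2) (the L2 penalty, assumed here)."""
    data_term = sum((predict(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)
    penalty = sum(wi * wi for wi in w)
    return data_term + lam * penalty


def fit(xs: Sequence[Vector], ys: Vector, lam: float,
        lr: float = 0.05, steps: int = 2000) -> List[float]:
    """Minimize regularized_loss with plain batch gradient descent."""
    n, d = len(ys), len(xs[0])
    w = [0.0] * d
    for _ in range(steps):
        grad = [0.0] * d
        # Gradient of the mean squared error term.
        for x, y in zip(xs, ys):
            err = predict(w, x) - y
            for j in range(d):
                grad[j] += 2.0 * err * x[j] / n
        # Gradient of lam * sum(w_j^2) is 2 * lam * w_j: it pulls weights toward zero.
        for j in range(d):
            grad[j] += 2.0 * lam * w[j]
            w[j] -= lr * grad[j]
    return w


if __name__ == "__main__":
    xs = [[1.0], [2.0], [3.0]]
    ys = [2.0, 4.0, 6.0]
    print(fit(xs, ys, lam=0.0))  # weight close to 2.0
    print(fit(xs, ys, lam=1.0))  # weight shrunk toward zero, about 1.65
```

On this toy data, the unpenalized fit recovers a weight of about 2.0, while `lam=1.0` shrinks it to 28/17 ≈ 1.65 (the closed-form minimizer). The penalty accepts a little extra training error in exchange for smaller weights, which adds bias and reduces variance.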


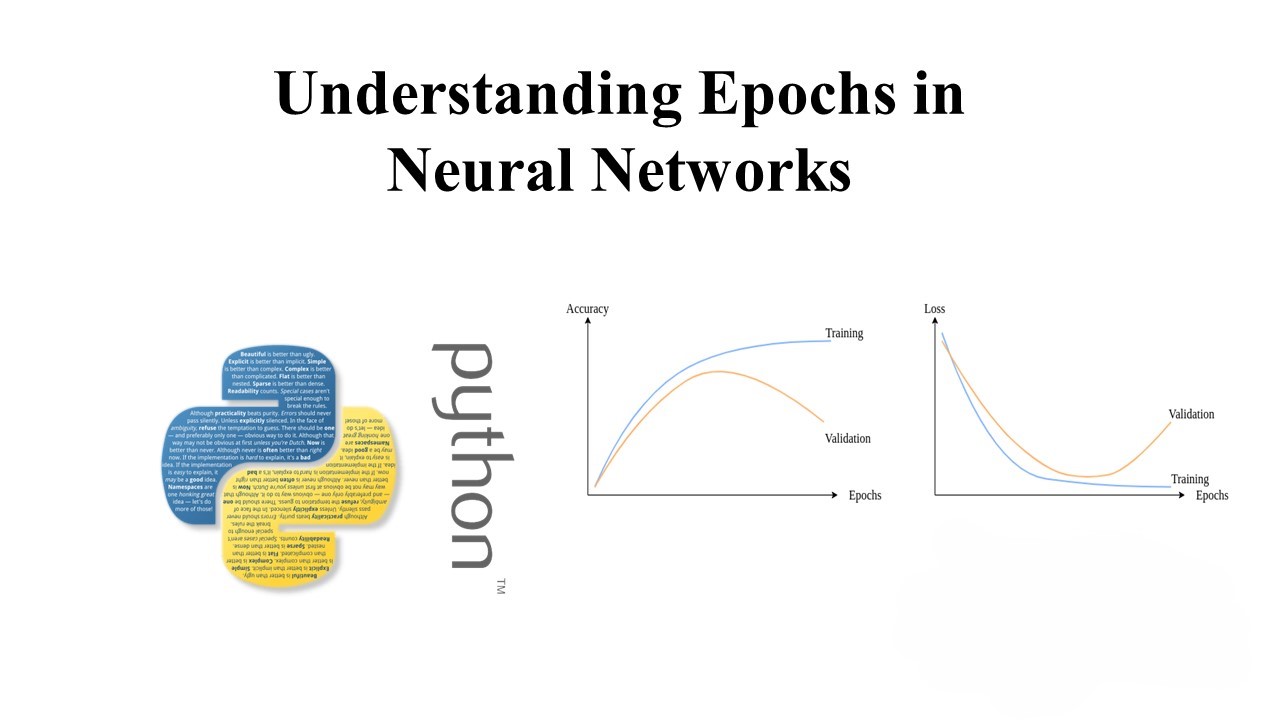

Understanding Epochs in Neural Networks: A Comprehensive Guide

In this tutorial, we’ll dive deep into the concept of epochs in neural networks. We’ll explore how the number of epochs affects training convergence and how early stopping can be used to improve a model’s generalization.

Neural Networks: A Brief Overview

Neural networks are powerful machine learning models, commonly used for supervised tasks such as classification or regression…
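Early stopping, mentioned in the teaser, is often implemented as a patience rule: train one epoch at a time, track the best validation loss, and stop once it has failed to improve for a set number of consecutive epochs. The following Python sketch illustrates this approach. The names `train_with_early_stopping`, `run_epoch`, `patience`, and `min_delta` are hypothetical. The validation losses in the usage example are simulated for illustration and are not results from the article.

```python
from typing import Callable, Tuple


def train_with_early_stopping(
    run_epoch: Callable[[int], float],
    max_epochs: int,
    patience: int,
    min_delta: float = 0.0,
) -> Tuple[int, float, int]:
    """Call run_epoch(epoch) once per epoch. It is assumed to train for one full
    pass over the training data and return the validation loss. Stop when the loss
    has not improved by more than min_delta for `patience` consecutive epochs.

    Returns (best_epoch, best_loss, epochs_run).
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    epochs_run = 0
    for epoch in range(max_epochs):
        val_loss = run_epoch(epoch)
        epochs_run += 1
        if val_loss < best_loss - min_delta:
            best_loss = val_loss
            best_epoch = epoch
            epochs_without_improvement = 0
            # In a real training loop, checkpoint the model weights here.
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch, best_loss, epochs_run


if __name__ == "__main__":
    # Simulated validation losses: they improve, then rise as the model starts to overfit.
    simulated = [1.0, 0.7, 0.5, 0.45, 0.47, 0.5, 0.55, 0.6, 0.7]
    result = train_with_early_stopping(lambda e: simulated[e],
                                       max_epochs=len(simulated), patience=3)
    print(result)  # (3, 0.45, 7)
```

On the simulated curve, the best loss (0.45) occurs at epoch 3. The next three epochs fail to improve on it, so training stops after 7 of the 9 available epochs. In practice, you would restore the weights saved at the best epoch rather than keep the final ones.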