Tag: #TextRepresentation

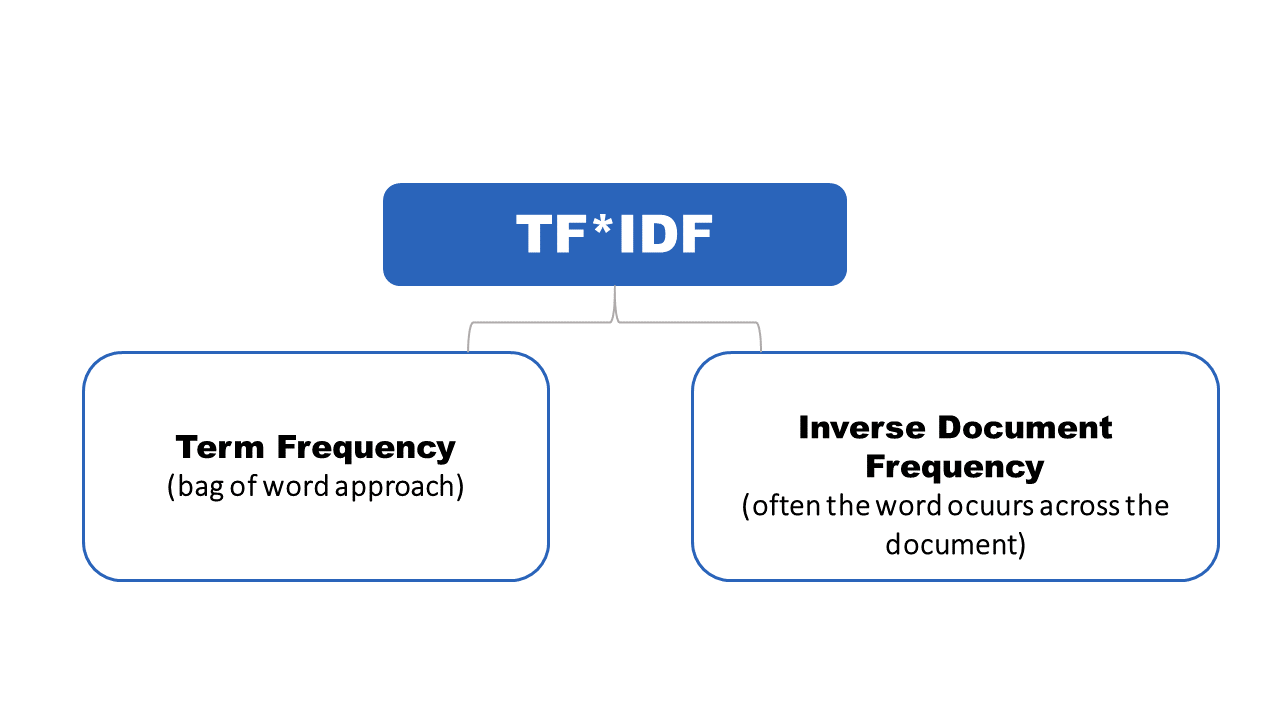

A Deep Dive into Text Classification with TF-IDF

Introduction: Text classification, the task of assigning predefined labels to pieces of text, is a cornerstone of Natural Language Processing (NLP) and one of the most direct ways to put textual data to work. In this blog post, we walk through text classification with TF-IDF features using Python, pandas, NLTK, and scikit-learn. Our practical example revolves around travel- and food-related sentences,…