Tag: #WordEmbeddings

-

Unveiling the Power of Word Embeddings with Gensim

In Natural Language Processing (NLP), word embeddings have emerged as a game-changer. Traditional approaches treat each word as a sparse, independent feature (for example, a one-hot or count vector). Word embeddings instead represent each word as a dense, low-dimensional vector learned from the contexts it appears in, so words used in similar ways end up close together. One pioneering model in this domain is Word2Vec, developed by Tomas Mikolov and colleagues at Google. In…

-

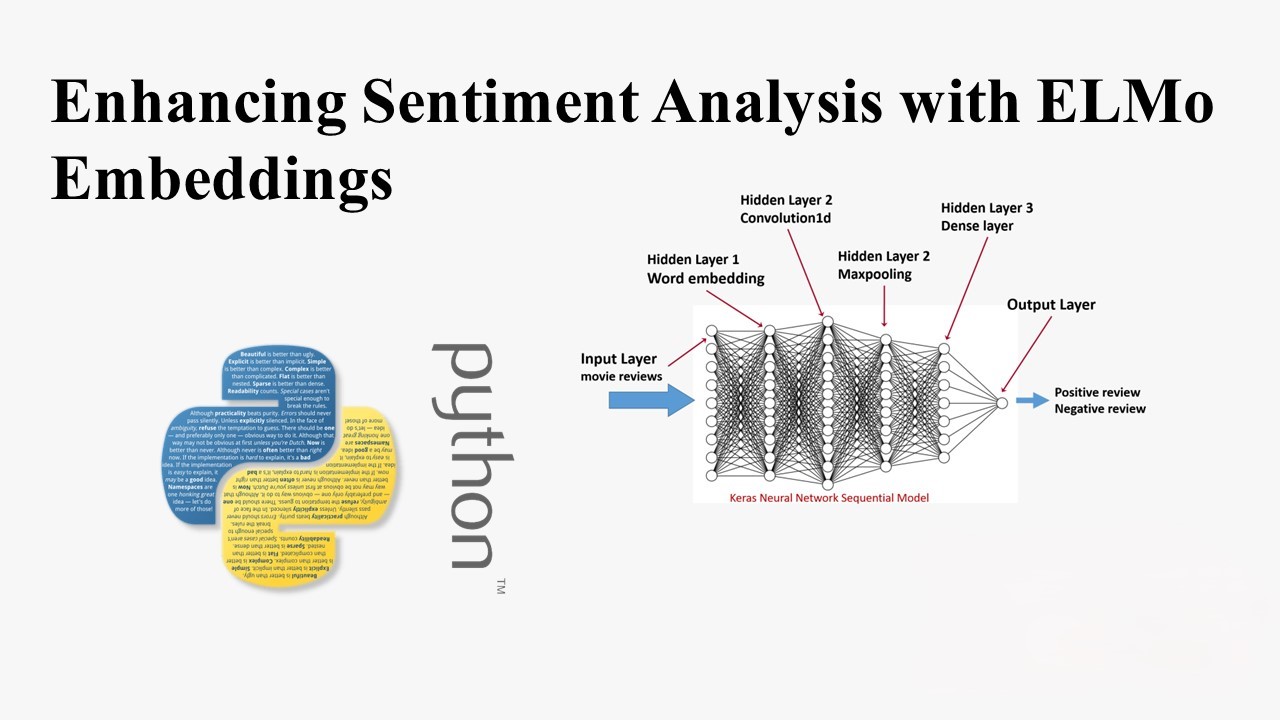

Enhancing Sentiment Analysis with ELMo Embeddings: A TensorFlow Experiment

Introduction

Natural Language Processing (NLP) has received a significant boost from transfer learning. In this blog post, we explore ELMo embeddings, contextual word embeddings pretrained on a large unlabelled text corpus, and use them to improve sentiment analysis. We'll walk through the implementation using TensorFlow and TensorFlow Hub.

Preparation

Let’s start by setting…