In this tutorial, we’ll dive deep into the concept of epochs in neural networks. We’ll explore how the number of epochs impacts training convergence and how early stopping can be used to optimize model generalization.

Neural Networks: A Brief Overview

Neural networks are machine learning models most often trained in a supervised setting to solve classification or regression problems. Building one involves several architectural decisions (such as the number of layers and units per layer), data preprocessing steps, and training settings such as the number of epochs.

What is an Epoch in Neural Networks?

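An epoch is one complete pass of the training algorithm over the entire training set. In practice, the data is usually split into mini-batches, so a single epoch consists of many iterations. For each batch, the network performs a forward pass (prediction), computes the error, and then performs a backward pass (gradient computation) followed by a weight update. Once every training example has been used, the epoch is complete.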
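To make the relationship between epochs and weight updates concrete, here is a minimal sketch. The dataset size and batch size are illustrative assumptions, not values taken from this tutorial, and the helper names are hypothetical.

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Number of mini-batch updates needed to see every example once."""
    if num_examples <= 0 or batch_size <= 0:
        raise ValueError("num_examples and batch_size must be positive")
    # The last batch may be smaller than batch_size, hence the ceiling.
    return math.ceil(num_examples / batch_size)


def total_updates(num_examples: int, batch_size: int, epochs: int) -> int:
    """Total number of weight updates over the whole training run."""
    return steps_per_epoch(num_examples, batch_size) * epochs


# Illustrative numbers only (assumed for this example).
print(steps_per_epoch(60_000, 32))    # 1875
print(total_updates(60_000, 32, 20))  # 37500
```

With these assumed numbers, one epoch corresponds to 1,875 weight updates, so 20 epochs means 37,500 updates in total.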

Neural Network Training Convergence

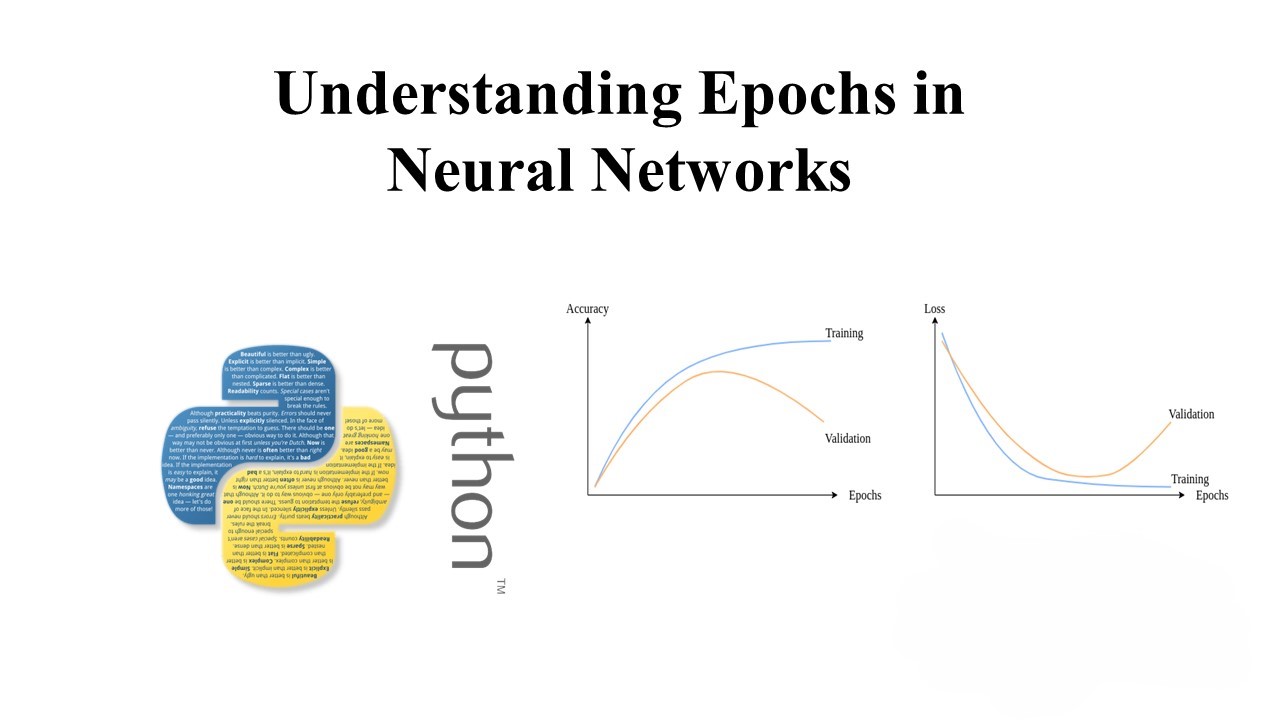

Achieving convergence during training is crucial to building a well-performing model. We aim to minimize error while ensuring the model generalizes well to new data. Overfitting (high variance) and underfitting (high bias) are common challenges in neural network training.

To monitor convergence, we often plot learning curves: loss (or error) versus epoch, or accuracy versus epoch, for both the training set and the validation set. Ideally, the loss decreases and the accuracy increases from one epoch to the next until both level off.
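One simple way to check whether a curve has stabilized is to compare the best recent loss against the best earlier loss. The sketch below is only one possible heuristic. The function name, the `window` and `min_delta` parameters, and the loss values are all assumptions made for illustration.

```python
def has_plateaued(losses, window=3, min_delta=1e-3):
    """Return True if the best loss over the last `window` epochs improved
    on the best earlier loss by less than `min_delta`."""
    if len(losses) <= window:
        return False  # not enough history to judge
    best_before = min(losses[:-window])
    best_recent = min(losses[-window:])
    return best_before - best_recent < min_delta


# Hypothetical per-epoch validation losses.
val_loss = [0.90, 0.60, 0.45, 0.40, 0.3996, 0.3995, 0.3997]

print(has_plateaued(val_loss[:4]))  # False: the loss is still dropping quickly
print(has_plateaued(val_loss))      # True: almost no change over the last 3 epochs
```

A plateau in the training loss alone does not say anything about generalization, which is why the validation curve is usually the one to watch.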

The Role of Epochs in Model Training

Deciding the appropriate number of epochs is essential for training a neural network. Setting too few epochs may lead to underfitting, while setting too many may result in overfitting and wasted computational resources.

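Early stopping is a practical solution to this dilemma. It stops training when the model’s generalization error, as measured on a held-out validation set, starts to increase. Because validation metrics fluctuate from epoch to epoch, training is usually stopped only after the validation loss has failed to improve for a set number of consecutive epochs, and the weights from the best epoch are kept. Early stopping thus limits overfitting and avoids spending compute on epochs that no longer help.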
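The early stopping rule can be sketched in a few lines of plain Python. This is a simplified illustration rather than any library's implementation. The function name, the `patience` and `min_delta` parameters, and the validation losses are assumptions chosen for the example.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Simulate early stopping over a list of per-epoch validation losses.

    Returns (stop_epoch, best_epoch), both 1-indexed. stop_epoch is None
    if the stopping condition is never triggered.
    """
    best_loss = float("inf")
    best_epoch = None
    epochs_without_improvement = 0

    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            # New best validation loss: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch

    return None, best_epoch


# Hypothetical validation losses: improving until epoch 4, then rising.
val_losses = [0.70, 0.52, 0.41, 0.38, 0.40, 0.43, 0.47]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
print(stop_epoch, best_epoch)  # 6 4
```

Here training would halt at epoch 6, and the weights from epoch 4 would be the ones to keep. In Keras, the same idea is available as the `tf.keras.callbacks.EarlyStopping` callback, which accepts `monitor`, `patience`, and `restore_best_weights` arguments and is passed to `model.fit` through its `callbacks` parameter.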

Conclusion

Understanding the concept of epochs is crucial for effectively training neural network models. By carefully selecting the number of epochs and implementing early stopping, we can build models that strike the right balance between bias and variance, ultimately leading to better generalization performance.

Now, let’s take a look at a simple code snippet to illustrate the training process:

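    # Import the necessary libraries
    import tensorflow as tf
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense

    # Assumption: the tutorial does not name a dataset. MNIST is used here
    # because its flattened 28x28 images match the 784-feature input and the
    # 10 output classes; the MNIST test split stands in for a validation set.
    (X_train, y_train), (X_val, y_val) = tf.keras.datasets.mnist.load_data()
    X_train = X_train.reshape(-1, 784).astype("float32") / 255.0
    X_val = X_val.reshape(-1, 784).astype("float32") / 255.0

    # Define the neural network architecture
    model = Sequential([
        Dense(64, activation='relu', input_shape=(784,)),
        Dense(64, activation='relu'),
        Dense(10, activation='softmax')
    ])

    # Compile the model
    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

    # Train for a fixed number of epochs, evaluating on the validation data after each one
    model.fit(X_train, y_train, epochs=20, validation_data=(X_val, y_val))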

This code snippet demonstrates how to define and train a simple neural network using TensorFlow/Keras, specifying the number of epochs for training.

By understanding epochs and employing appropriate training strategies like early stopping, we can build neural network models that effectively learn from data and generalize well to unseen examples.
